Proposal would force Apple and Google to block explicit images on iOS, Android by default

The U.K., like many other regions, wants to protect children by having iOS and Android phones block explicit content by default.

Companies like Apple and Google are under pressure from governments around the world to implement age verification tools that prevent children, including teens, from viewing certain content on their mobile devices. For example, the U.K.'s Home Office wants nudity-detection algorithms built directly into iOS and Android that would block such images by default on iPhones and Android handsets.

U.K. seeks to protect children from viewing explicit content


The U.K. had considered making such a system mandatory for all smartphones sold in the country but will, for now, settle for manufacturers adopting the plan voluntarily. The Financial Times reports that the plan to block nude images on smartphones will be part of a larger strategy to reduce violence against women and girls that the U.K. will soon announce.

In 2021, Apple announced plans to have iPhones scan for child sexual abuse material, a plan it subsequently killed off. | Image credit: PhoneArena

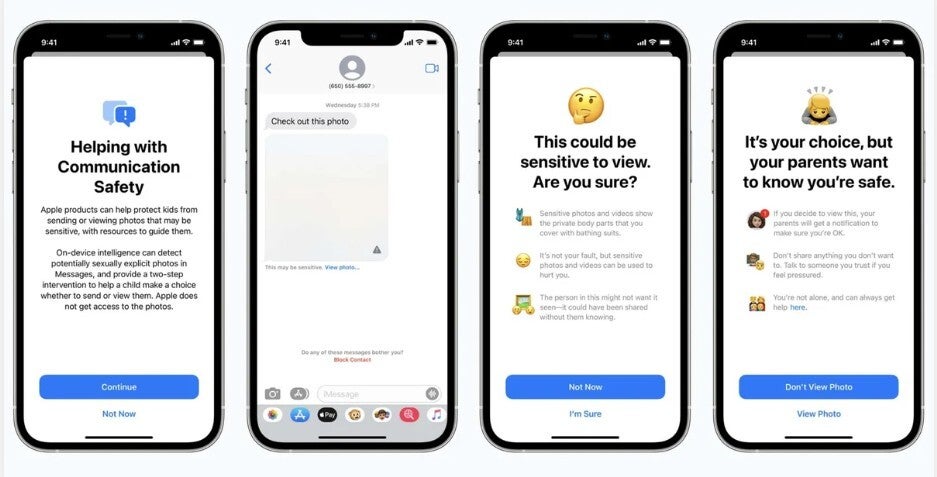

A system-wide nudity block that works on third-party apps by default would be new to iOS. In iOS 26.1, Apple did add filters for users aged 13 to 17 that detect and block sensitive images in FaceTime, Messages, and AirDrop. However, older teens can still view a blocked image after receiving a warning.

Clock running out on Apple's battle to halt new Texas law

Apple CEO Tim Cook has previously warned that a U.S. government requirement for system-level age verification would force platforms like iOS and Android to collect personal data. That hasn't stopped one particular state. Starting January 1 in Texas, a new state law (SB2420) takes effect that imposes several requirements on app marketplaces and developers, including one that requires anyone in Texas opening a new Apple Account to confirm that they are 18 years of age or older.

Apple says, "While we share the goal of strengthening kids’ online safety, we are concerned that SB2420 impacts the privacy of users by requiring the collection of sensitive, personally identifiable information to download any app, even if a user simply wants to check the weather or sports scores." Apple is part of a group asking the courts to prevent the Texas law from taking effect, arguing that it "imposes a broad censorship regime on the entire universe of mobile apps." You might recall that back in 2021, Apple announced a plan to have individual iPhone units scan for child sexual abuse material (CSAM). Apple eventually decided to drop the plan.

Other countries are also taking action to protect children from potentially harmful online activities. Australia recently made it illegal for anyone under the age of 16 to use social media. While most platforms are cooperating, Reddit has filed a suit seeking to have the new law struck down.
