Apple's decision not to roll out its CSAM photo monitoring could be permanent

In August, Apple announced that it would introduce a collection of features on its iPhones and iPads in an attempt to limit the spread of Child Sexual Abuse Material (CSAM). With the iOS 15.2 update, Apple released some of its new child safety features but not its CSAM photo monitoring feature.

Apple’s CSAM photo monitoring feature was postponed in September because of the negative feedback it received. The statement announcing the delay was posted on Apple's Child Safety page; however, all information about the CSAM photo monitoring tool, along with the statement itself, was removed at some point on or after December 10, MacRumors reports.

By removing all information about its CSAM photo monitoring tool from its website, Apple may have signaled that it has abandoned the feature.

After the announcement was made in August, numerous individuals and organizations criticized Apple's newly announced features for its mobile devices.

The most common criticism leveled at Apple concerned the way its CSAM photo monitoring feature would work. Apple's idea was to use on-device intelligence to match CSAM collections stored in iCloud. If Apple had detected such material, it would have flagged the account and provided information to the National Center for Missing and Exploited Children (NCMEC).

According to researchers, Apple would be using technology akin to spying, and that same technology is useless at identifying CSAM images. After testing Apple's CSAM photo monitoring feature, the researchers stated that someone could avoid detection by slightly changing a photo.

Although Apple tried to reassure users that the technology works as intended and is completely safe, the disagreement persisted.

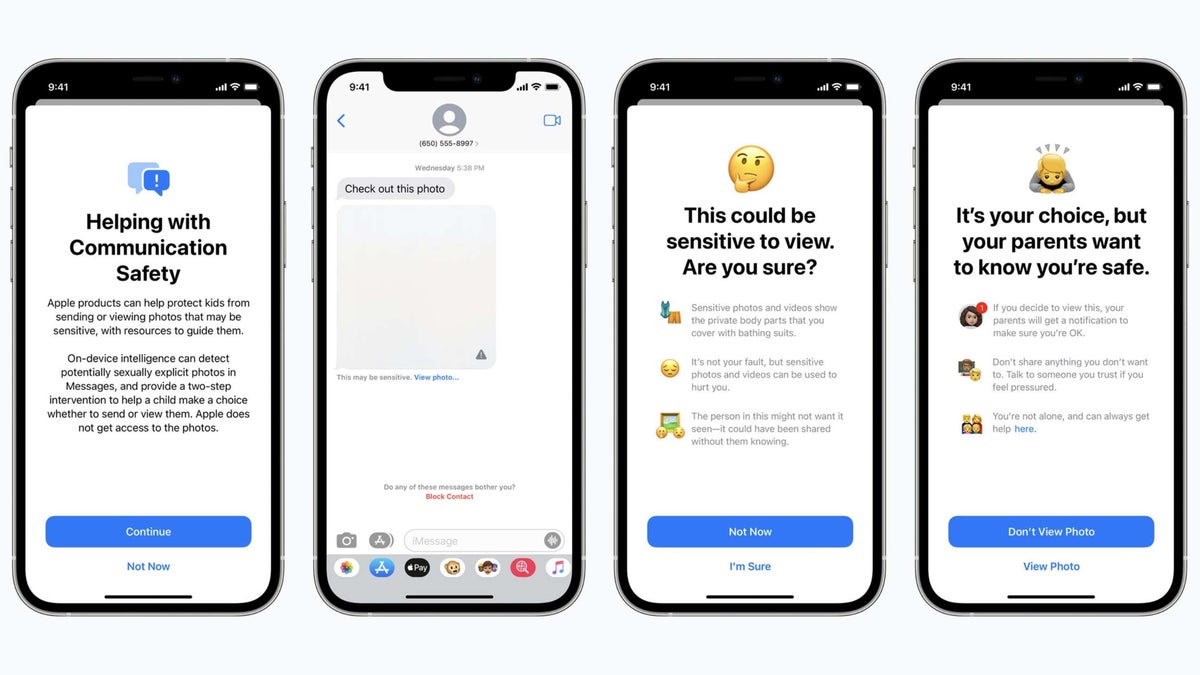

With the iOS 15.2 update, Apple launched some of its child safety features. iMessage can now warn children when they receive or send photos that contain nudity. When a child receives or sends such content, the photo is blurred and iMessage displays a warning.

In iOS 15.2, Apple has also added extra guidance to Siri, Spotlight, and Safari Search, providing additional resources to help kids and their parents stay safe online.
