NVIDIA executive says rule of thumb related to chip performance is dead

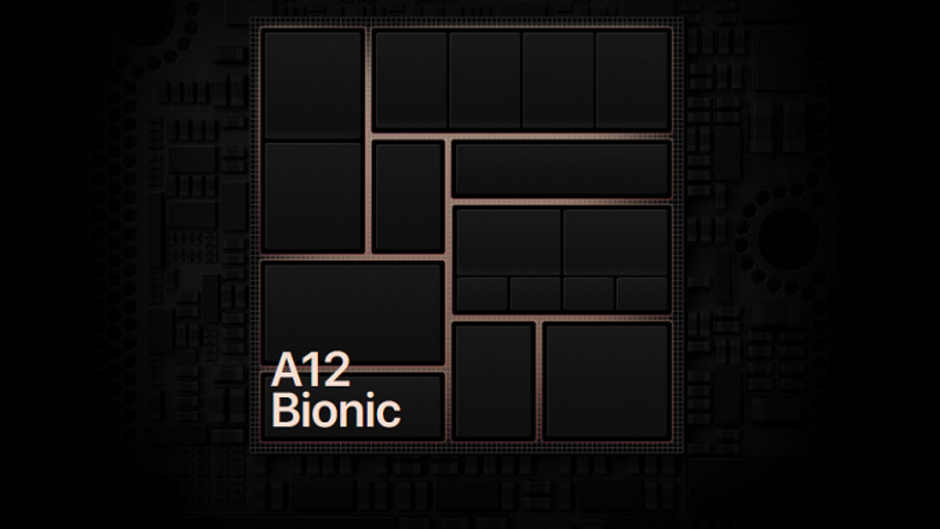

Created by Intel co-founder Gordon Moore in 1965, the rule of thumb known as Moore's Law originally held that the number of transistors in an integrated circuit would double every year. Moore revised it in 1975, saying that the number of components in an integrated circuit would keep doubling every year until 1980 and every two years after that. Performance gains in mobile devices, including Apple's iPhone and Samsung's Galaxy line, have depended on the law continuing to hold.

During a Q&A session at the Las Vegas Convention Center today, NVIDIA co-founder and CEO Jensen Huang said that Moore's Law has slowed from a 10X improvement every five years to only about 2X every ten years. The cost and complexity of cramming ever more transistors into a small area make it difficult to count on chip performance doubling on a regular schedule.

"Moore's Law used to grow at 10X every five years [and] 100X every 10 years. Right now Moore's Law is growing a few percent every year. Every 10 years maybe only 2X. ... So Moore's Law has finished." - Jensen Huang, co-founder and CEO, NVIDIA
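To put Huang's figures on a common footing, each multi-year factor can be converted into an equivalent annual growth rate by compounding. The Python sketch below is illustrative only: `annual_rate` is a hypothetical helper, and it assumes perfectly steady compound growth, which real chip progress does not follow.

```python
def annual_rate(factor: float, years: float) -> float:
    """Compound annual growth rate implied by growing `factor`-fold over `years` years.

    Assumes smooth, constant compounding (an idealization).
    """
    return factor ** (1 / years) - 1


# Figures quoted by Huang
old_pace = annual_rate(10, 5)          # 10X every five years
old_pace_decade = annual_rate(100, 10) # 100X every ten years (the same pace)
new_pace = annual_rate(2, 10)          # roughly 2X every ten years

print(f"10X per 5 years:   {old_pace:.1%} per year")
print(f"100X per 10 years: {old_pace_decade:.1%} per year")
print(f"2X per 10 years:   {new_pace:.1%} per year")
```

Under these assumptions, the old pace works out to roughly 58.5% per year, while 2X per decade is about 7.2% per year.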

Analyst Patrick Moorhead of Moor Insights & Strategy said, "Moore's Law, by the strictest definition of doubling chip densities every two years, isn't happening anymore. If we stop shrinking chips, it will be catastrophic to every tech industry." But he noted that there are other ways to improve the performance of devices that have relied on Moore's Law, including advances in software and new ways to package chips.
