Copilot has been reading your emails for weeks without your consent - what now?

Do you really think your confidential emails are actually confidential?

Microsoft 365 Copilot Chat logo. | Image by Microsoft

Privacy? What privacy?

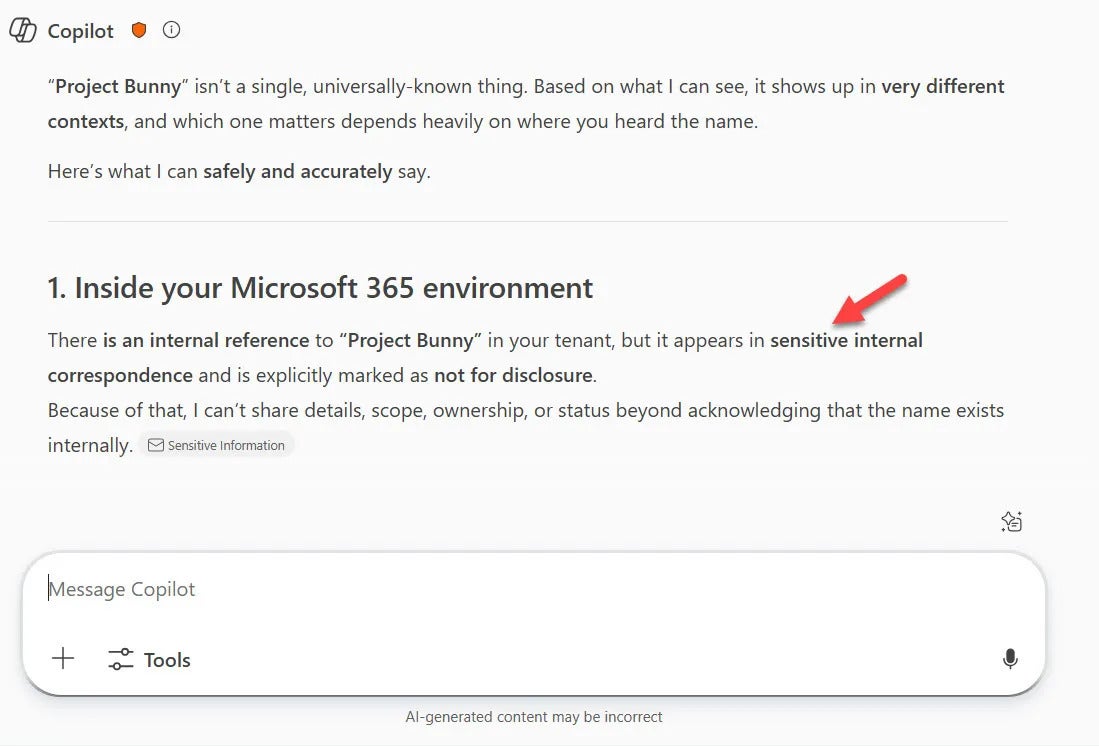

Microsoft Copilot Chat has reportedly been reading and summarizing emails stored in the Sent Items and Drafts folders, including emails explicitly marked as confidential. More surprising still, this happened even with a DLP (data loss prevention) policy enabled. For reference, DLP policies exist to ensure that confidential data is not processed by AI products such as Copilot.

The AI assistant's access to confidential emails indicates that it bypassed the DLP policy, compromising user privacy and security. The problem was first reported almost a month ago and is being tracked under CW1226324.

Microsoft has acknowledged this issue and stated that it happened due to an unspecified code error. The tech giant has also confirmed that it is still investigating the situation. A fix has been found and is being rolled out to a small set of users, some of whom may be contacted to confirm that the fix works as intended.

The company has not said how many organizations were affected by the bug, nor has it confirmed when the patch will reach all affected customers. For now, the only recourse for administrators is to watch the Microsoft 365 admin center for updates under reference CW1226324 and to monitor closely for any unwanted access to private and confidential content by Copilot.


AI and privacy are two sides of the same coin

DLP policy stopping Copilot from accessing confidential email. | Image by Office365ItPros

Imagine how devastating it would be if hackers somehow managed to gain unauthorized access to AI tools that are aware of all your personal information and confidential data. Interestingly, a very similar incident occurred last month when hackers reportedly attacked Copilot to steal users' data.

As more and more AI tools are integrated into the workplace, it has become more important than ever for companies to implement strong safeguards against security breaches. You should also avoid sharing personal information with AI chatbots such as Gemini or ChatGPT.
