The iPhone has become awful at photography as of late

Once the king of mobile photography, the iPhone now takes photos that look more fake than real.

Cameras, including their sensor sizes, megapixel counts, and lenses, are a huge part of the marketing for modern flagship smartphones. The iPhone, which was arguably once the king of mobile photography (if you ignore some Chinese phones), has now become simply awful at that one very important job.

The culprit is Apple Intelligence, Apple's incomplete and, in many respects, inferior suite of AI features. Software enhancement is a very common trick that phone manufacturers use to help their devices take better photos, but in Apple's case it has had the opposite effect.

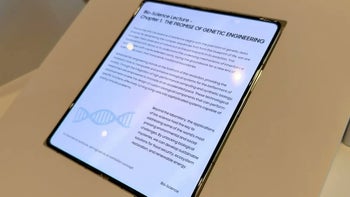

There are now multiple user reports of Apple Intelligence processing and "enhancing" a photo after it's been taken, only to turn all the lettering in it into gibberish. Sometimes it works fine; other times it completely spoils a perfectly good image. As one user put it, at this point we are basically just "estimating reality."

In my opinion, as someone who has used many different AI models for various tasks, this usually happens with a model that isn't very powerful. The AI tries to guess what the image should be showing, but doesn't have a comprehensive enough dataset to do that satisfactorily.

If Apple's AI can't enhance lettering with a 100 percent success rate, then it should either skip this "enhancement" altogether or give users an option to disable it. The cameras on modern iPhone models are powerful enough that they don't need phony processing to improve the pictures they take.

Apple is well aware of its shortcomings in artificial intelligence and has been making a number of moves to overcome them. The company briefly considered buying Perplexity AI for Apple Intelligence, and has also recently asked Anthropic and OpenAI for help with Siri. Apple may also take a page out of Samsung's book and bring Google's flagship AI model, Gemini, to iOS.

Compromised photography due to awful post-processing is yet another example of Apple's recent string of bad software updates. Apple is more of a software company than a hardware company, but it has been having a hard time showing it lately.

iPhone 16 has excellent cameras, but not so excellent AI. | Video credit — Apple
