How does the Amazon Fire Phone do its 'Dynamic Perspective' 3D magic?

Dynamic Perspective needs at least two cameras looking at your face at all times. The Amazon Fire Phone has four front-facing cameras, so even when your fingers cover some of them, at least two should still have a clear view.
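Amazon has not published exactly how the phone locates your head. Still, textbook stereo triangulation shows why two cameras are the minimum. The same face appears at slightly different horizontal positions in two cameras a known distance apart. That difference, the disparity d, gives the distance Z = f·B/d, where f is the focal length in pixels and B is the baseline between the cameras. The Python sketch below assumes an idealised, rectified pair of pinhole cameras. `StereoRig`, `triangulate_face` and the numbers in the example are hypothetical, not Fire Phone specifications.

```python
from dataclasses import dataclass


@dataclass
class StereoRig:
    """Hypothetical calibration of a rectified two-camera pair."""
    focal_px: float     # focal length in pixels (same for both cameras)
    baseline_mm: float  # distance between the two camera centres
    cx: float           # x-coordinate of the principal point in pixels


def triangulate_face(rig: StereoRig, x_left: float, x_right: float) -> tuple[float, float]:
    """Return (lateral offset X, depth Z) in mm of a face seen by both cameras.

    x_left and x_right are the face's horizontal pixel positions in the left
    and right images. Coordinates are relative to the left camera; the right
    camera is assumed to sit baseline_mm to its right.
    """
    disparity = x_left - x_right
    if disparity <= 0:
        # Zero disparity means the face is "at infinity"; negative means a bad match.
        raise ValueError("expected a positive disparity between the two views")
    depth = rig.focal_px * rig.baseline_mm / disparity   # Z = f * B / d
    lateral = (x_left - rig.cx) * depth / rig.focal_px   # back-project into mm
    return lateral, depth


rig = StereoRig(focal_px=500.0, baseline_mm=60.0, cx=320.0)
print(triangulate_face(rig, x_left=370.0, x_right=270.0))  # (30.0, 300.0)
```

Take a 500 px focal length and a 60 mm baseline, with the face at x = 370 px in the left image and x = 270 px in the right. The 100 px disparity puts the face 300 mm away and 30 mm to the side of the left camera. A single camera provides no disparity, so it cannot recover depth this way.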

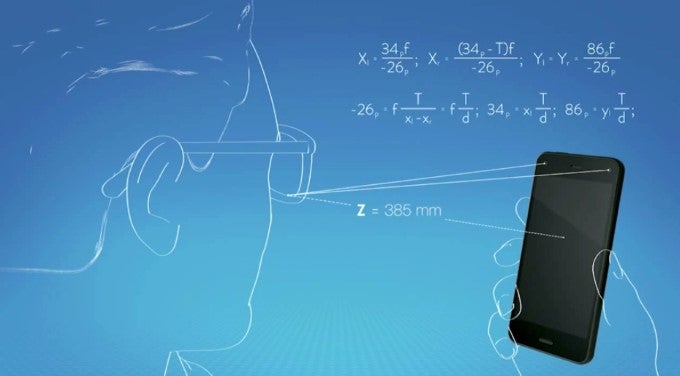

The most notable feature of the new Amazon Fire Phone is something called Dynamic Perspective, a glasses-free, 3D-like effect embedded throughout the phone, from the wallpapers to the refashioned Carousel interface to apps and games. But how was Amazon able to pull off such a trick?
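Once the phone knows where your head is, one common way to produce a glasses-free, 3D-like effect is motion parallax. Each layer of the interface gets a virtual depth behind the screen and shifts as your head moves, so distant layers appear to slide more than near ones. The sketch below illustrates that general idea and is not Amazon's renderer. It assumes the screen is the plane z = 0 and the head sits `head_z_mm` in front of it. `layer_offsets` is a hypothetical helper. By similar triangles, a point at depth d behind the screen must be drawn at x_head · d / (z_head + d).

```python
def layer_offsets(head_x_mm: float, head_z_mm: float, depths_mm: list[float]) -> list[float]:
    """Hypothetical helper: horizontal on-screen shift (mm) for each layer.

    The screen is the plane z = 0, the viewer's head sits head_z_mm in front
    of it and head_x_mm to the side of the screen centre, and each layer is
    meant to appear depths_mm behind the screen.
    """
    if head_z_mm <= 0:
        raise ValueError("the head must be in front of the screen")
    offsets = []
    for d in depths_mm:
        # Similar triangles: the ray from the eye to a point d behind the
        # screen crosses the screen plane at head_x * d / (head_z + d).
        offsets.append(head_x_mm * d / (head_z_mm + d))
    return offsets


# Head 30 mm right of centre, 300 mm from the screen.
print(layer_offsets(30.0, 300.0, [0.0, 100.0, 300.0]))  # [0.0, 7.5, 15.0]
```

A layer drawn at the screen surface (depth 0) stays put. Deeper layers shift toward the side the head has moved to, approaching the full head offset as depth grows. That shift is what makes a background seem to sit "inside" the phone.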

Jeff Bezos took to the stage yesterday to unveil the new phone, and he also spoke about the challenges the company faced in bringing this feature to market. In fact, Bezos mentioned that the Fire Phone project started nearly 4 years ago, and that the first prototypes relied on the user wearing glasses so the phone could track their face. Not the best way to do it, is it?

It's a technology 4 years in the making

Going even deeper into the technical details, Amazon also revealed that it uses global shutter cameras on the front rather than the more traditional rolling shutter ones. A global shutter camera exposes the entire frame at the same instant instead of reading it out row by row, so a moving face is not skewed. Amazon says these cameras are also much faster and use less power, which matters because they fire dozens of times every second. By the company's figures, there is a 10x difference in efficiency between a rolling shutter and a global shutter camera.

Finally, Amazon opened the new Dynamic Perspective SDK to developers on the day of the event, so if you're a coder, you can start supporting the 3D-like functionality in your apps right away. Amazon says it has made the process really simple.
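The difference between global and rolling shutters matters for face tracking. A rolling shutter reads the sensor out one row at a time, so a face that moves during readout lands at a different horizontal position in each row and comes out skewed. A global shutter exposes every row at the same instant. The sketch below models only that geometric effect, not Amazon's power-efficiency figures. The row count, per-row readout time and head speed are hypothetical values chosen for illustration.

```python
def row_offsets(rows: int, line_time_us: float, speed_px_per_ms: float,
                global_shutter: bool) -> list[float]:
    """Horizontal displacement (px) of a moving object in each sensor row,
    relative to where it was when row 0 was captured."""
    if global_shutter:
        # Every row is exposed at the same instant, so there is no skew.
        return [0.0] * rows
    # Rolling shutter: row r is captured r * line_time_us later than row 0.
    return [speed_px_per_ms * (r * line_time_us / 1000.0) for r in range(rows)]


# Hypothetical sensor: 480 rows, 20 us per row; head moving at 0.5 px/ms.
rolling = row_offsets(480, 20.0, 0.5, global_shutter=False)
global_ = row_offsets(480, 20.0, 0.5, global_shutter=True)
print(f"rolling shutter skew: {rolling[-1]:.2f} px")  # 4.79 px
print(f"global shutter skew: {global_[-1]:.2f} px")   # 0.00 px
```

With these made-up numbers, the bottom of the face is drawn almost 5 px away from where the top was captured. A tracker that assumes a rigid face would have to correct for that shear, and a global shutter avoids it entirely.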
