Will AI help us live in the moment? Google's Project Astra and Apple's next-gen Siri are a huge deal!

This article may contain personal views and opinions from the author.

The time when a minority of people complain about the majority staring at their phones, instead of actually interacting with each other and being present in the moment, might be coming to an end.

Sure, technological progress in these last few decades has been pretty rapid. We went from people living with no phones to people living in their phones, because phones are just so amazing and addictive.

Millennials and Gen Z can't even imagine life without the entire world's information at their fingertips, and every person they ever knew being immediately reachable.

But as great as phones are, they have a limitation that probably won't be around for much longer – they are physical objects that you have to hold, and stare down at. Their screens are two-dimensional portals that your eyes need to focus on, ignoring what's actually in front of you.

You might not want it to be like that, but you're in a new town and, obviously, you need to follow Google Maps directions to find your way around.

Or maybe you're watching a YouTube tutorial on how to build a treehouse, so you're missing a rare bird that just landed on a tree near you. It's just a technological limitation that we're going to move past. And we're on our way. Here are the hints…

Last week at Google I/O, some of us were hoping for a few hardware unveilings, perhaps a Google Pixel Fold 2, but the Android owner spent its entire livestream talking about the AI features its Gemini language model will be bringing to your phone very soon.

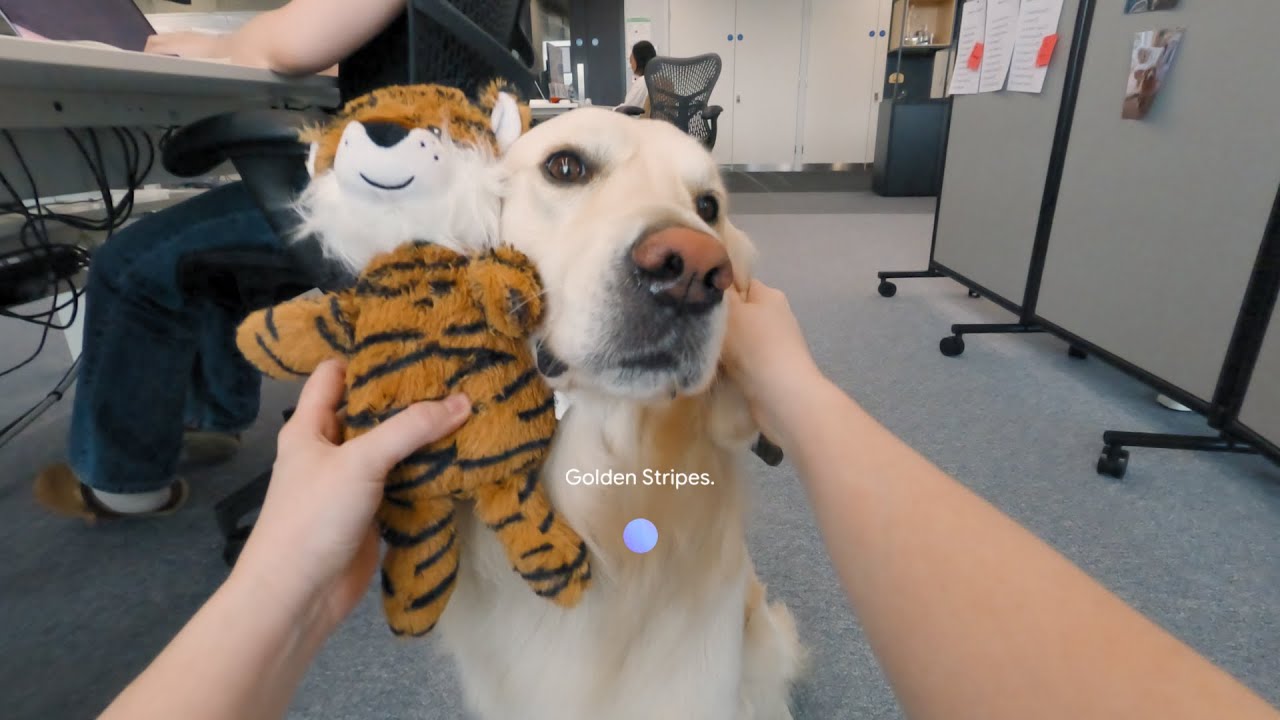

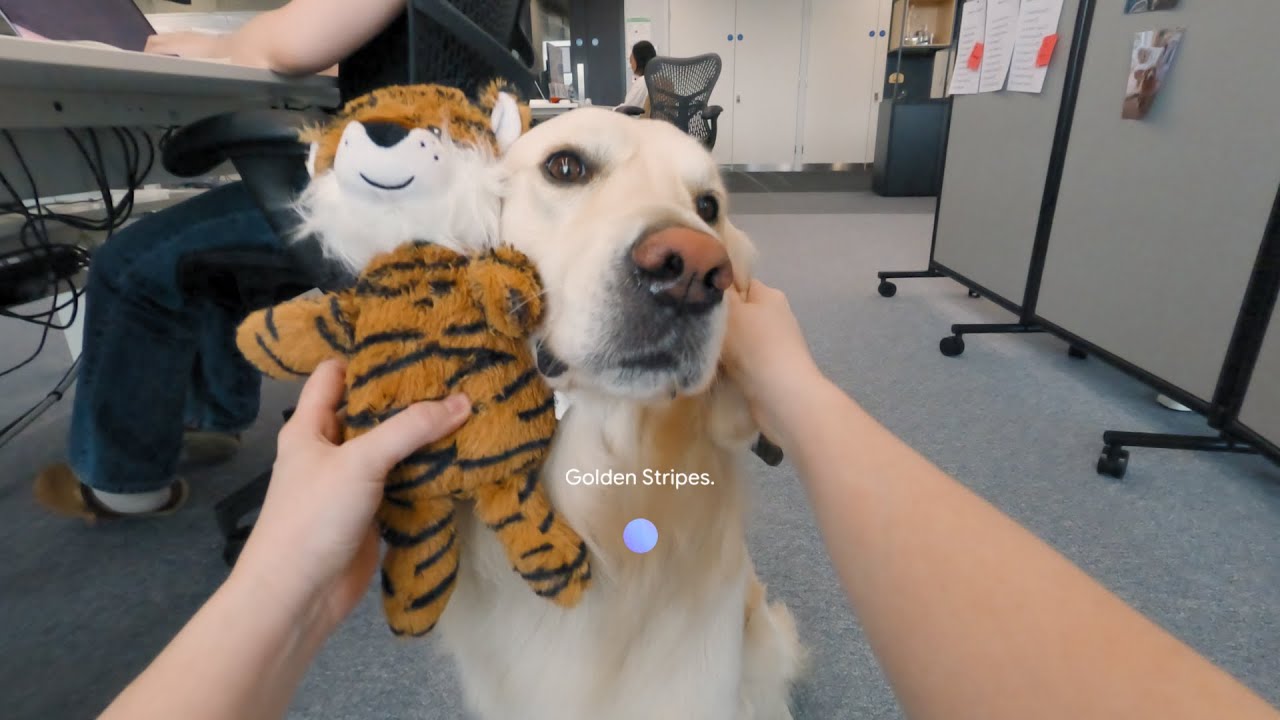

A particular highlight for me, an AR/VR enthusiast, was Google's Project Astra demonstration, which you can watch below. It showcased a scenario where Google's AI is integrated into smart glasses, and can see and understand everything you're seeing.

With such technology, you don't actually need to use your phone; it could just quietly do its computational work in your pocket, while a pair of unobtrusive glasses paired to it shows you the results. A camera in them serves as the AI's portal into your world, instead of the other way around – you having to use your phone as a portal into the digital world.

Once this technology matures and becomes mainstream, and believe me – it will – most of the things you normally do on your phone won't require you to pick it up and stare at it.

You'll just be able to look at the world around you, fully present, maybe more than ever, and get your Maps directions projected on the lenses of your glasses.

Or your search results, or notifications, or whatever else you normally pull a smartphone out of your pocket for.

This technology isn't far away from being a part of your life.

Meta's Ray-Ban smart glasses already come with the Meta AI assistant built in, which you can talk to for the kind of intelligent, conversational AI experience you may have seen from ChatGPT.

Meta's whole selling point for these glasses is exactly that, and rightfully so: being able to do everything you'd normally use a phone for without interrupting your actual life, remaining present instead.

They have a camera, but alas – no screen, unlike the many "wearable display" glasses we've reviewed, such as the Xreal Air 2 Pro. Those offer a virtual screen, projected from your smartphone onto the lenses, but no AI.

So, combine those two, and you get… probably what Google tried to show us in that Project Astra demo video. Probably what we'll all be using sooner than we think.

So with all that in mind…

As mentioned earlier, our smartphones are amazing, but due to their slab form factor, they still have a limitation – they take us away from life around us, as we need to hold them, and look at their screen.

As is becoming ever more evident, this limitation won't be around forever, because phones won't stay like this. I can see a pretty near future where they're just the computational brick in your pocket, while smart glasses are what (unobtrusively) connects you to the digital world and your AI assistant.

AI assistants like Siri are about to get major updates this year, which will turn them from basic shortcuts for quick tasks into intelligent, conversational, human-like entities that can see what you see, understand it, help you with any question you can think of, and even generate images and videos for you.

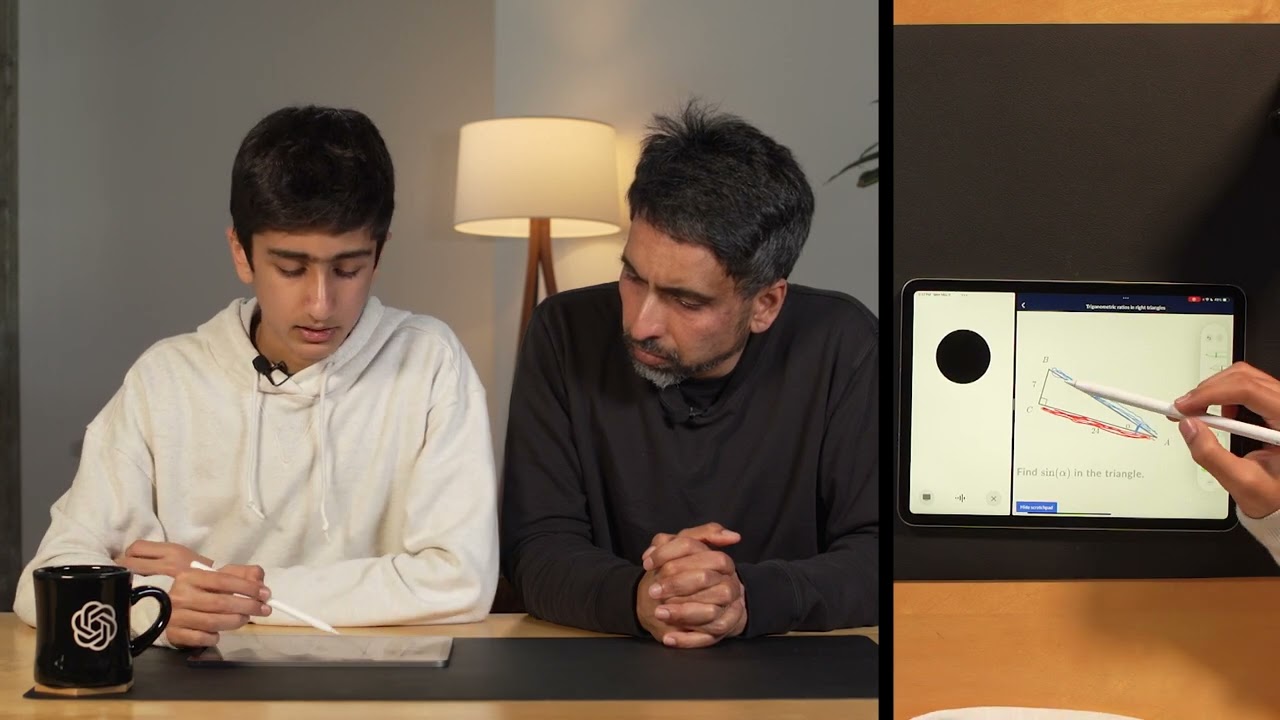

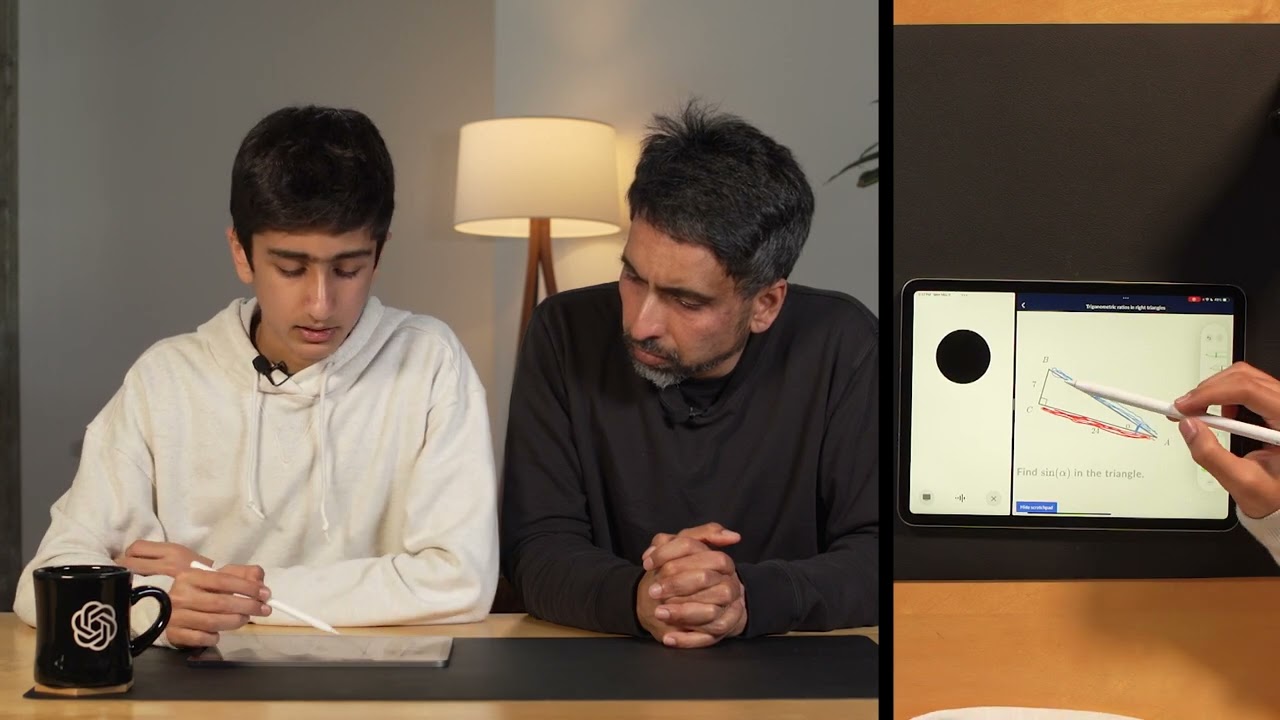

Here's a recent OpenAI GPT-4o demo that blew my mind. It helps a kid do math on their iPad by seeing the math problem, and providing hints towards solving it, without actually spelling out the answer.

That's the kind of attention and individual care most kids can't hope to receive at school. If I'd had this technology by my side as a kid, I'd probably know trigonometry pretty well, I told myself.

Now the best part is – this isn't going to be a ChatGPT-only thing. Google, Apple and Meta will all trip over themselves to match and surpass it, and not only that, but to come to our main point – it's not going to stay imprisoned inside your iPad and phone either.

Picture a real pen and paper with a math problem on the desk in front of you, and a virtual assistant seeing it through your glasses, and tutoring you, or your child, privately through the speakers in said glasses.

Whatever "living in the moment" means to you – I believe the AI technologies we just looked at will bring us far closer to it than we've been at any point since the internet arrived.

We'll finally be looking at things in the real world again, instead of pictures on a flat screen, so that alone is a huge win in my book. Even people who are addicted to taking pictures and posting them on social media won't need to constantly pull out their phones for it, but will actually remain present, looking at the person, object, or landscape, and be able to appreciate it more than ever.

In fact, this whole shift could cause a ripple effect on how we use social media and, optimistically, fix a lot of problems we've created for ourselves because social media is so addictive. At least the part where everyone always looks down at a phone.

But what do you think about all of this? Excited, or concerned? Share your thoughts with your fellow tech enthusiasts in the comments section below.
