The Pixelworks interview: how OnePlus took a Samsung display and made it better

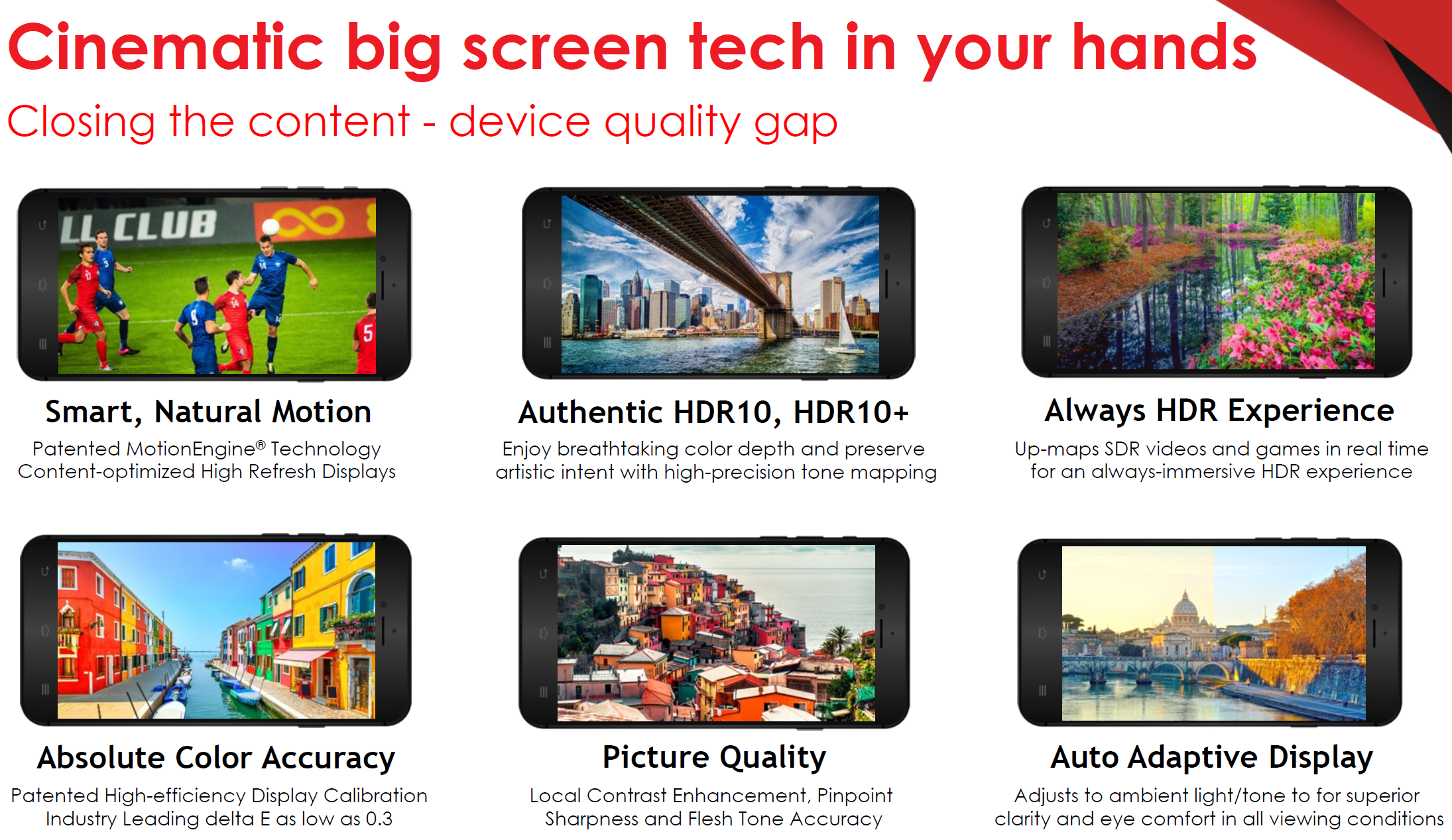

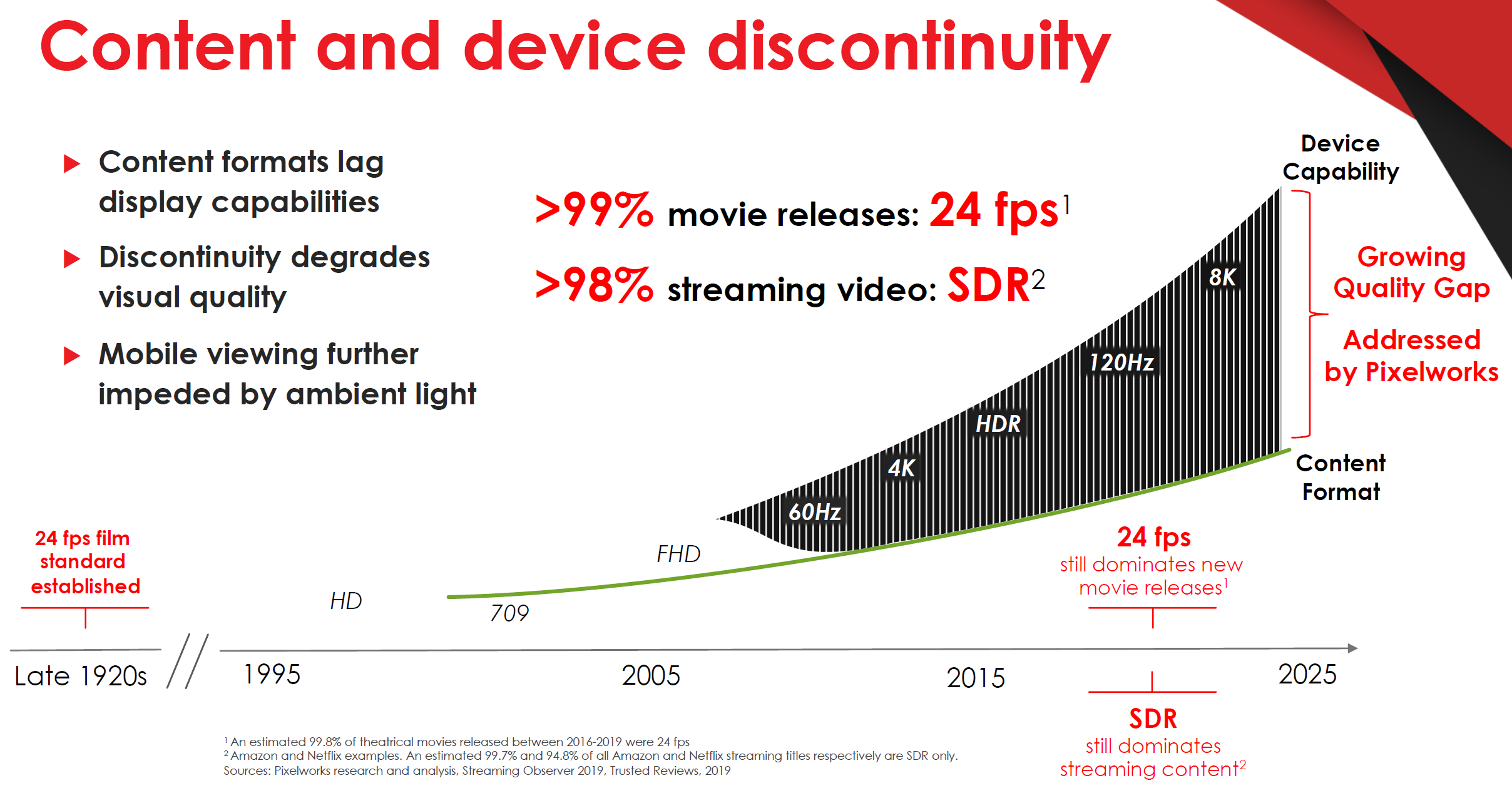

What's behind Android spring chickens like the OnePlus 8 Pro getting iPhone-level screen calibration and beating Samsung at its own flagship display game by getting more features out of the same panel?

The answer is a good ol' American, Silicon Valley innovator striving for imaging perfection, called Pixelworks. We recently sat down for an interview with its CEO, Todd DeBonis; its Sr. Director of Technical Marketing, Vikas Dhurka; and its Vice President of Corporate Marketing, Peter Carson.

The secret is out. The magic behind the industry’s most exceptional display — on the OnePlus 8 Pro — comes in a little chip called the Pixelworks visual processor. @pixelworksinc #smartphones #technology https://t.co/Op2F8Zunz5

— Peter Carson (@PCarson123) June 13, 2020

Pixelworks, a good ol' Silicon Valley success story

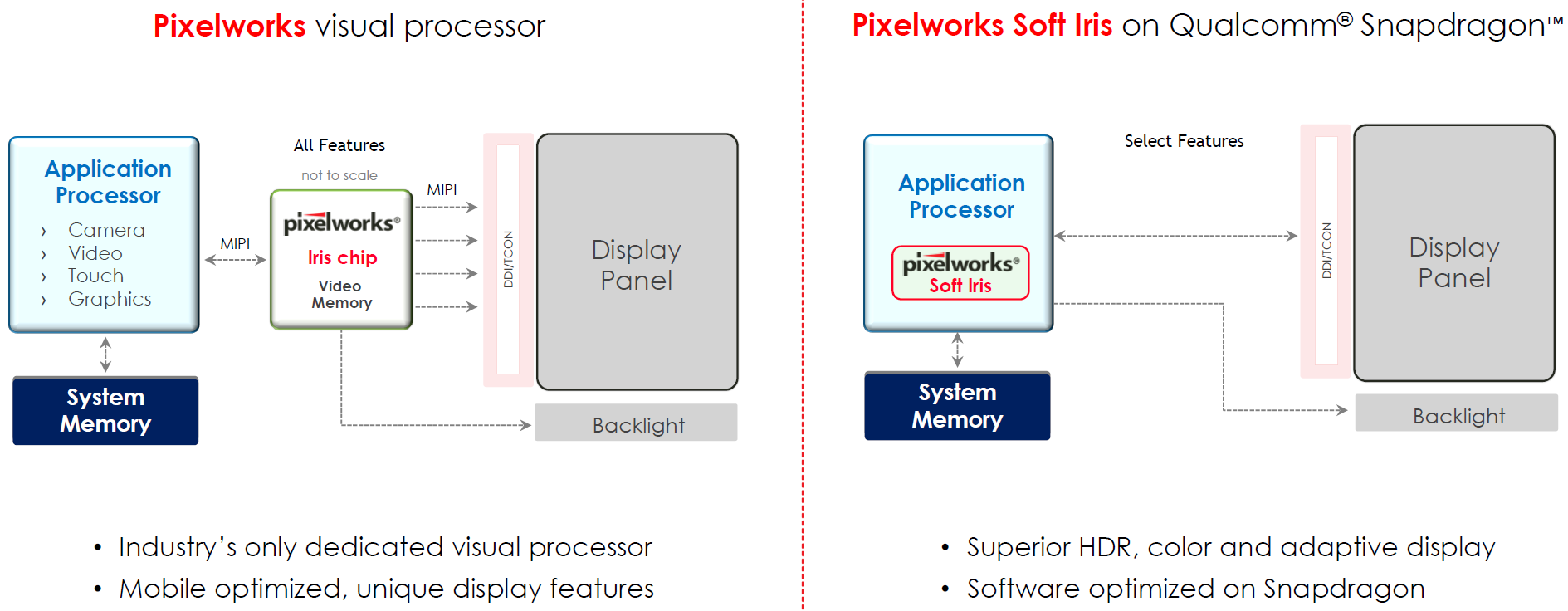

Under CEO Todd DeBonis, Pixelworks recently entered a strategic partnership with Qualcomm to offer its Soft Iris display processing and calibration solution to customers of Qualcomm's flagship 8-series mobile chipsets, starting with the Snapdragon 855. This would partly explain the uncharacteristically rich array of new display and image processing features that Qualcomm bragged about when announcing the 855 and 865 mobile processor generations.

The result? Phones like the OnePlus 8 Pro, which pair a Samsung panel with the Pixelworks Iris 5 chip and software, making the display not only color-accurate but also richer in features (like dynamic refresh at full resolution or variable video frame rates) than what Samsung offers on its own, more expensive flagship S20 series.

Moreover, third-party display measurements, like those in our review or DisplayMate's benchmarks, also show excellent color coverage across all gamuts, plus Delta E and white balance numbers in line with or better than what Apple or Samsung achieve. What gives?
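The "Delta E" figures that reviews report measure how far a displayed color lands from its target. The simplest formula, CIE76, is the Euclidean distance between the two colors in CIELAB space; reviewers and DisplayMate may well use newer formulas such as CIEDE2000, so treat this as a minimal illustration of the idea rather than their method. The patch values below are made up, not measurements of any phone.

```python
import math
from typing import List, Tuple

Lab = Tuple[float, float, float]  # (L*, a*, b*) coordinates in CIELAB


def delta_e_cie76(target: Lab, measured: Lab) -> float:
    """CIE76 color difference: straight-line distance in CIELAB space."""
    dl = measured[0] - target[0]
    da = measured[1] - target[1]
    db = measured[2] - target[2]
    return math.sqrt(dl * dl + da * da + db * db)


def average_delta_e(pairs: List[Tuple[Lab, Lab]]) -> float:
    """Mean Delta E over a list of (target, measured) test patches."""
    return sum(delta_e_cie76(t, m) for t, m in pairs) / len(pairs)


# Hypothetical test patches: (target color, color measured on screen).
patches = [
    ((50.0, 0.0, 0.0), (50.0, 0.0, 3.0)),       # error of 3.0
    ((70.0, 10.0, -20.0), (74.0, 10.0, -17.0)),  # error of 5.0
]
print(average_delta_e(patches))  # 4.0
```

Lower is better: a well-calibrated panel drives the average toward zero across every patch in every gamut it supports.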

The Pixelworks interview, or how mobile displays and streaming content got a picture-perfect marriage

This interview with the Pixelworks team about its pivot to mobile, a transformation whose benefits the company is now reaping, has been slightly edited for length and clarity.

PhoneArena: Could you elaborate a bit further on the individual post-production display calibration that Pete Lau from OnePlus said the 8 Pro panels had undergone, and which seems like a requirement for using the Iris 5 technology? How long does it take, how much does it add to the cost, etc.?

Sr. Director Vikas Dhurka: No, it's actually a differentiator, [not a requirement]. OEMs in the past were choosing not to do that for cost reasons, specifically for costs associated with factory test time. Our process is patented, and we can deliver calibration across all color gamuts in a single process without going back to recheck. This process only takes about 26 seconds [per phone], which is a fraction of the time required by other approaches.

VP Peter Carson: How we perform calibration is actually very flexible. We can calibrate at the display factory level, or at the phone level. Our customers are choosing to do it at the phone level, which affords them some significant color accuracy advantages because it compensates for certain characteristics of a phone that can affect color accuracy, such as power supply and thermals.

PhoneArena: What goes into the Iris 5 chip on phones like the OnePlus 8 Pro, and how does it add to the final cost of the display package?

Pixelworks CEO Todd DeBonis: It's a six- to eight-month close collaboration with their [OnePlus] software development team, display system engineers, the display vendor, and in many cases, the AP [application processor] vendor. We effectively insert our visual processor between the AP and the display controller using the MIPI interfaces.

We have become almost like an outsourced display system engineering partner. With the 8 Pro, we started working through all the bugs... You go through hundreds before you bring a phone to production today. We are also so integrated [that] we are usually the first reviewer of all these bugs, so we help the team assign and work through them. Some [of the bugs] go back to the AP vendor, some to the display vendor, some are Android related, and some go back to the phone manufacturer who is configuring what you call a “skin,” in their version of Android.

VP Peter Carson: The Iris chip is actually a tiny fraction of the display system cost. Where we got our traction initially was in the price-sensitive mid-tier. The reason was that recent entrants like HMD, TCL and others found that they could differentiate with premium features like HDR without having to step up to pricier 700- or 800-tier apps processors. And with our Iris 3 chip it’s an always-on HDR experience, so you get that great color depth and contrast even when viewing SDR content… in addition to best-in-class color accuracy and other enhancements. The AP is not the only place for potential savings. There can be as much as a $10 cost range within each class of display. The Pixelworks chip is a small fraction of that range, so the overall impact is at least cost neutral or a net cost reduction… when considering display panel or apps processor cost savings.

PhoneArena: Can you comment on the virtues of having a hardware dynamic refresh rate, MEMC, and display HDR component, compared to a predominantly software solution like on, say, the Galaxy S20 series?

VP Peter Carson: Our customers now have display leadership. This is one point I really want to emphasize. We anticipated the high refresh rate trend very early on and optimized our Iris 5 solution for it. Our customers are taking advantage of that now... That's why we have an architecture that dynamically maps specific feature sets to specific apps and use cases. And at the same time the system also dynamically switches between Iris hardware and software, depending on the use case.

The advantage of our implementation in the OnePlus 8 Pro or the OPPO Find X2 is that we have certain core features that can run efficiently in software on the apps processor, such as calibration for color accuracy, automatic screen brightness control and tone adaptation to ambient light. Our chip has a superset of features including these and more complex functions like motion processing, SDR-to-HDR conversion, sharpness and [video] frame processing, that need to be done in hardware for performance and power efficiency. We have very specialized chips with a lot of custom IP, optimized to be the most power-, performance- and cost-efficient of any implementation.

You just can't get those efficiencies running software on a DSP or a GPU for some of these functions. Our combination of hardware and software gives the system flexibility and delivers a consistent user experience, since the software features running on Snapdragon also run on the Iris chip. For static use cases like web browsing, social media, or reading, the system can revert to software features only and save power because they don’t require the kind of processing you need for dynamic cases like video and gaming.
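The split Carson describes, software features on the apps processor for static content and the dedicated chip for dynamic content, can be pictured as a simple routing policy. This is a hypothetical sketch: the use-case names, feature names and the `plan_pipeline` function are illustrative assumptions built from the features listed in the interview, not Pixelworks' firmware or any real API.

```python
from enum import Enum, auto


class UseCase(Enum):
    WEB = auto()
    SOCIAL = auto()
    READING = auto()
    VIDEO = auto()
    GAMING = auto()


# Core features the interview says can run in software on the apps processor.
SOFTWARE_FEATURES = {"color_calibration", "auto_brightness", "ambient_tone_adaptation"}
# Heavier features the interview says need dedicated hardware.
HARDWARE_ONLY_FEATURES = {"memc", "sdr_to_hdr", "sharpness", "frame_processing"}

# Static use cases named in the interview; everything else is treated as dynamic.
STATIC_CASES = {UseCase.WEB, UseCase.SOCIAL, UseCase.READING}


def plan_pipeline(use_case: UseCase) -> dict:
    """Hypothetical routing: static content stays on the software path to
    save power; video and games engage the display chip."""
    if use_case in STATIC_CASES:
        return {"path": "software", "features": set(SOFTWARE_FEATURES)}
    # The chip runs a superset, so the core software features stay consistent.
    return {"path": "hardware", "features": SOFTWARE_FEATURES | HARDWARE_ONLY_FEATURES}


print(plan_pipeline(UseCase.READING)["path"])  # software
print(plan_pipeline(UseCase.GAMING)["path"])   # hardware
```

Making the hardware path a superset of the software path mirrors the interview's point about consistency: calibration and brightness behavior don't change when the system switches paths.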

For example, say you're playing a video or game at 24 or 30 frames a second and upscaling it to 60 to match the display… we can do that with lower total system power than running natively at 60 on the apps processor, [even while] we are running additional enhancements as well. Bottom line, the processor improves display quality with only a negligible impact on total system power.
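The arithmetic shows why 24 fps content doesn't map cleanly onto a 60 Hz display: 60 / 24 = 2.5, so frames can't all be repeated the same number of times. Classic 3:2 pulldown alternates three and two repeats, which produces judder; motion-compensated interpolation (MEMC) instead synthesizes new frames at fractional positions between source frames. The sketch below only computes that timing schedule; the hypothetical `interpolation_schedule` function does no motion estimation, and how the Iris chip actually estimates motion is not described in the interview.

```python
import math
from fractions import Fraction


def interpolation_schedule(src_fps: int, out_hz: int, n_out: int):
    """For each output refresh i (shown at time i / out_hz), return
    (k, phase): the output lies `phase` of the way from source frame k
    to source frame k + 1. Phase 0 means an original frame can be shown
    as-is; any other phase needs a synthesized (interpolated) frame."""
    schedule = []
    for i in range(n_out):
        pos = Fraction(i * src_fps, out_hz)  # output time measured in source frames
        k = math.floor(pos)
        schedule.append((k, pos - k))
    return schedule


for k, phase in interpolation_schedule(24, 60, 6):
    print(k, phase)
```

For 24 to 60, the phases cycle through 0, 2/5, 4/5, 1/5, 3/5, so four of every five displayed frames must be interpolated. For 30 to 60, the phases simply alternate between 0 and 1/2.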

Watch this 90-second explainer video to learn how MEMC works, what problems it solves and the unique benefits behind the latest advancements in the technology for mobile. #smartphones #mobilephones #display #video @PCarson123 @dhurka pic.twitter.com/E8KfTfhT7J

— Pixelworks, Inc. (@pixelworksinc) May 8, 2020

We have done multiple levels of optimization with OnePlus to improve display performance across all scenarios including dynamic refresh rate switching, which is not supported in the Galaxy S20, nor is the combination of 120 Hz and WQHD resolution. Both are unique advantages in the OnePlus 8 Pro.
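Dynamic refresh rate switching, at its simplest, means choosing a panel mode that suits what is on screen instead of running at the maximum rate all the time. A minimal sketch, assuming a panel that exposes a fixed list of modes (the 60 and 120 Hz values here are illustrative) and a hypothetical policy: touch interaction gets the fastest mode, a static screen gets the slowest, and content plays at the slowest mode that is an exact multiple of its frame rate so every frame is shown a whole number of times. Real OEM policies likely weigh more factors, such as brightness and thermals, which are not modeled.

```python
from typing import Sequence


def pick_refresh_rate(content_fps: int, panel_modes_hz: Sequence[int], interactive: bool) -> int:
    """Hypothetical refresh rate policy for a panel with fixed modes."""
    modes = sorted(panel_modes_hz)
    if interactive:
        return modes[-1]  # scrolling and touch feel smoothest at the top rate
    if content_fps <= 0:
        return modes[0]   # nothing is changing on screen: save power
    for hz in modes:
        # Exact multiple: each source frame is held for a whole number of refreshes.
        if hz >= content_fps and hz % content_fps == 0:
            return hz
    return modes[-1]      # no clean multiple: fall back to the fastest mode


print(pick_refresh_rate(30, [60, 120], interactive=False))  # 60
print(pick_refresh_rate(24, [60, 120], interactive=False))  # 120
```

Here 24 fps lands on 120 Hz because 120 is an exact multiple of 24 (each frame is held for five refreshes), while 60 is not.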

PhoneArena: Will dynamic 120Hz, MEMC and the other unique features be possible with foldable displays like on the Galaxy Fold or the Huawei Mate Xs, too?

Sr. Director Vikas Dhurka: From a capability point of view, the technologies that we provide are agnostic to the screen size and the mechanical design of the display. With the Pixelworks processor, any display could have a visual experience that is far superior to anything else that's out there.

PhoneArena: We see that you mention a collaboration with Qualcomm on Snapdragon chipsets for the software Iris solution. Can you tell us a bit more about it? The reason for asking is, again, Samsung’s software refresh rate management solution, which seems to be much more of a battery hog on its Exynos models than on the Snapdragon ones, and your Soft Iris implementation might have something to do with that.

VP Peter Carson: We actually worked with them [Qualcomm] as an ISV [independent software vendor] on the implementation of our software, specifically to optimize how it runs on premium-tier Snapdragon chips like the 855 and 865. I can’t speak to the Exynos implementation, but the ability for our customers’ devices to dynamically switch between our software features running on Snapdragon and our highly optimized silicon-based features is perhaps a reason they are able to stretch the envelope of both performance and power efficiency more than others. We're still evaluating whether to bring our software solution to the Snapdragon 700 tier, but not all APs can run our software. It needs to have the appropriate display pipeline hardware. When we take our software to the next tier, we expect to continue working with Qualcomm.

VP Peter Carson: The big AP vendors perhaps do more to define how Android behaves in most phones [than Google]. Building consumer awareness of the benefits of specific refresh rates by app may be helpful. But honestly, most consumers don't want to be burdened with trying to figure that out unless they're tech-savvy consumers or avid gamers. And if you look at the approach that OnePlus and OPPO take, they have those toggles kind of buried in their system settings as lab features for people who really want to play with the display settings.

If you're following what's happening in the next round of flagship 120 Hz adaptive frame rate panels, I think smartphone displays will get more dynamic: things are going to become more adaptive, not less. So, the whole idea of manually switching and selecting modes, I think, could become outdated.

PhoneArena: You currently appear to have a first-mover advantage over any other display processing solution in the mobile realm. Even Samsung and Huawei can’t replicate what you guys did for OnePlus or OPPO phone displays: things like MEMC, dynamic refresh, adaptive colors, etc. Do you envision keeping this advantage next year as well, being a sort of boutique shop for the best mobile imaging solutions?

Over those six [Iris] generations we've garnered a lot of know-how: we've made mistakes and then fixed them. With that learning experience, anybody just coming into the market and trying to compete against this, even if they had a lot more money, can’t catch us on the learning curve.

So, if we let up on the level of intensity, if we take our foot off the development pedal, then we could let them catch up. I'm not doing that for the mobile business. We're trying to improve that experience. You can do it by just focusing on display capability, but if you really want to improve it, you need to start collaborating with the individual content providers...

When you go to high dynamic range and high contrast, you have to move to a higher frame rate, because the judder and blur become so apparent at a brighter, higher dynamic range that it becomes an uncomfortable experience. So the major studios, because they haven't figured out how to deliver the technology in a format where the experience would meet the discerning eye of a James Cameron or a Christopher Nolan, have basically decided they will not deliver HDR content to the theaters, and very limited [HDR] content will be delivered to the streaming services. Netflix has said everybody has to produce their original content in 4K HDR format, so if any director signs on, they will have to develop in 4K HDR. For this exact reason, some directors won't work with Netflix.

Motion was not why 24 fps became the standard (late 1920's). It was (1) synchronized sound recording (24 fps was min. rate for good sound) & (2) motorized projectors (eliminated varying frame rate from hand cranking). Today 24 fps is all about motion. See: https://t.co/nxJs3DigCg https://t.co/tyH4wCkzTS

— Peter Carson (@PCarson123) May 16, 2020

Do you have other questions for the Pixelworks team? Shoot them out in the comments below, and we'll relay them to the imaging quality experts...
