Navigate iPhone with your eyes, end motion sickness and more with new iOS 18 accessibility features

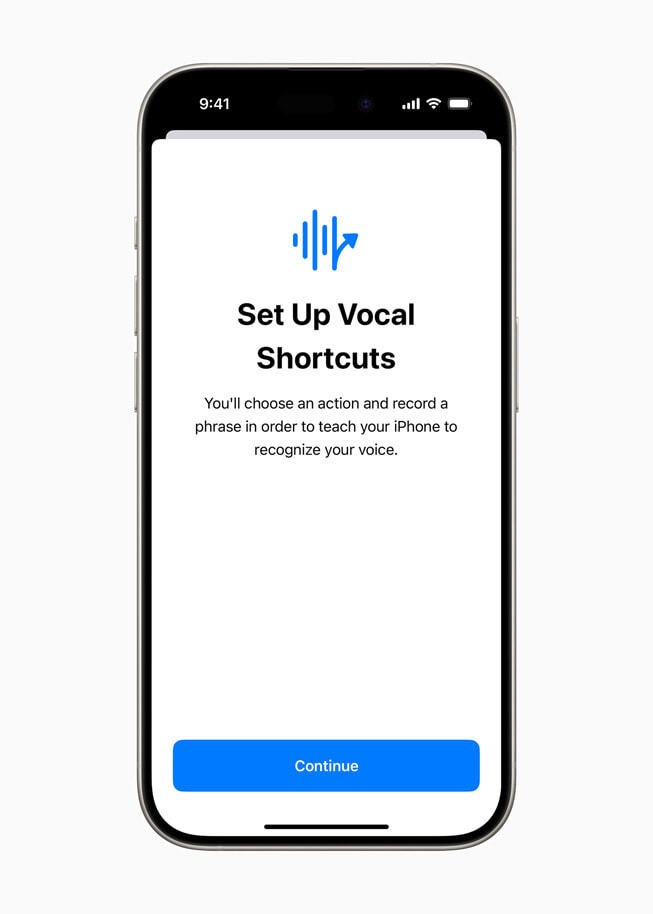

Today, Apple announced several new accessibility features, including one for Siri called Vocal Shortcuts. With this feature, iPhone and iPad users will be able to assign a custom phrase that triggers the digital assistant to launch a shortcut and handle complex tasks. For example, you can set up Siri to turn on Low Power Mode by saying "I'm running out of juice."

Another new accessibility feature, called "Listen for Atypical Speech," uses on-device machine learning to recognize an iPhone user's speech patterns. People whose speech is affected by cerebral palsy, amyotrophic lateral sclerosis (ALS), or stroke can use the feature to customize and control their iPhone with their voice.

New accessibility features such as Eye Tracking help iPhone users with speech issues

Mark Hasegawa-Johnson, with the Speech Accessibility Project at the Beckman Institute for Advanced Science and Technology at the University of Illinois Urbana-Champaign, says, "Artificial intelligence has the potential to improve speech recognition for millions of people with atypical speech, so we are thrilled that Apple is bringing these new accessibility features to consumers."

With Vocal Shortcuts, users can set a custom phrase to trigger a specific Shortcut

The iPhone and iPad will also gain Eye Tracking, which uses AI to help device owners navigate their phone or tablet using only their eyes. Eye Tracking relies on the front-facing camera and is set up and calibrated in seconds. All of the machine learning required for this tool stays on-device and is not shared with Apple. No additional hardware or accessories are needed, and the feature works across iOS and iPadOS apps. Users will be able to use their eyes to press physical buttons, swipe the screen, and make other gestures.

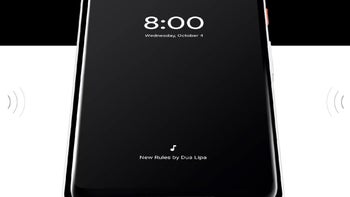

For those with hearing difficulties, the new Music Haptics accessibility feature will allow the Taptic Engine on an iPhone to "play taps, textures, and refined vibrations to the audio of the music." The feature works with millions of songs in the Apple Music catalog, and Apple will also make Music Haptics available as an API, which will allow developers to include the feature in their music-related apps.

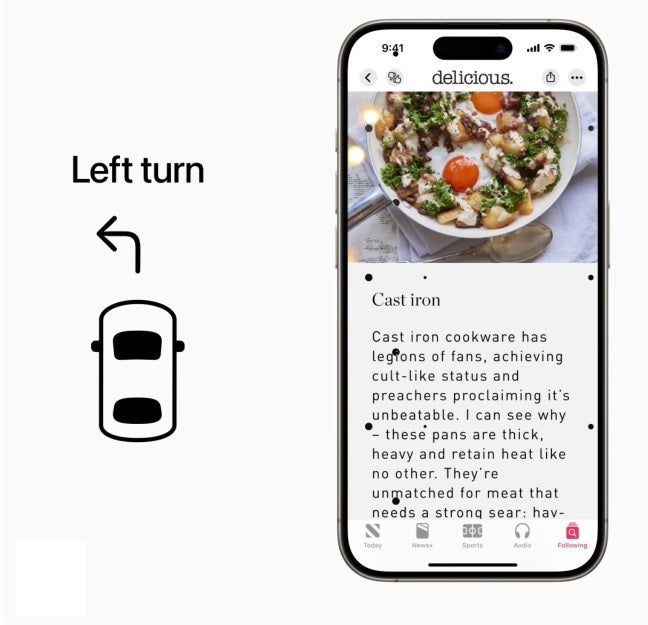

Another accessibility feature might stop a passenger from getting 'car sick'

Those who are prone to motion sickness while riding in a car can turn to Apple's Vehicle Motion Cues. The tech giant notes that research indicates motion sickness is the result of a difference between what a person feels and what they see. With this feature enabled, small animated dots on the edge of the iPhone screen represent changes in the motion of the vehicle, which reduces the sensory conflict that causes motion sickness. The feature can be set to appear automatically on the iPhone, or it can be turned on and off in the Control Center.

Vehicle Motion Cues can stop a passenger in a vehicle from getting motion sickness

With this feature enabled, the dots on the screen will move right while the car turns left. When the car accelerates, the dots move down to the bottom of the screen, and when the car brakes, the dots move up toward the top of the display. The goal is to match what the user feels from the movement of the car with what they see the dots doing on the screen.
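For readers who think in code, the dot behavior described above can be sketched as a simple mapping from vehicle motion to on-screen movement. This is only an illustration, not Apple's implementation: the function name, the sign conventions, and the `gain` parameter are all hypothetical, and the right-turn case is assumed by symmetry with the left-turn case.

```python
from dataclasses import dataclass


@dataclass(frozen=True)
class DotOffset:
    dx: float  # positive = toward the right edge of the screen
    dy: float  # positive = toward the bottom of the screen


def dot_offset(lateral: float, longitudinal: float, gain: float = 1.0) -> DotOffset:
    """Map vehicle motion to dot movement (hypothetical sketch).

    lateral:      negative = car turning left, positive = car turning right
    longitudinal: positive = car accelerating, negative = car braking
    gain:         assumed scale factor between motion and dot movement
    """
    # Left turn (negative lateral) moves the dots right (positive dx).
    # Acceleration moves the dots down (positive dy); braking moves them up.
    return DotOffset(dx=-gain * lateral, dy=gain * longitudinal)


if __name__ == "__main__":
    print(dot_offset(lateral=-1.0, longitudinal=0.0))  # car turning left
    print(dot_offset(lateral=0.0, longitudinal=1.0))   # car accelerating
    print(dot_offset(lateral=0.0, longitudinal=-1.0))  # car braking
```

In a real app the inputs would come from the phone's motion sensors, which this sketch leaves out.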

To reach the Accessibility options, open the Settings app and tap Accessibility. The new features will be available later this year.

Apple CEO Tim Cook says, "We believe deeply in the transformative power of innovation to enrich lives. That’s why for nearly 40 years, Apple has championed inclusive design by embedding accessibility at the core of our hardware and software. We're continuously pushing the boundaries of technology, and these new features reflect our long-standing commitment to delivering the best possible experience to all of our users."
