Google Pixel 2/XL Portrait Mode is unlike any other: here is how it works

With just one camera and AI, the Pixel 2 does Portrait Mode unlike any other phone

The Google Pixel 2/XL looks just like any other smartphone out there, but hidden inside is something powerful and innovative: artificial intelligence (AI).

Google publicly stated last year that it is moving from a mobile-first to an AI-first company, and this year it has doubled down on AI, machine learning and neural networks. The best results of that effort are summed up in the Pixel 2/XL camera.

First, let's state the obvious: the Pixel 2/XL - unlike many other phones - does not have two cameras on its back. However, it does have Portrait Mode. How is that possible?

Clever engineering and the power of AI come together to make this possible, and it's time to explain exactly how. But first, let's take a look at the...

Google Pixel 2 camera specs:

| Main camera | Google Pixel 2/XL | Google Pixel/XL |
|---|---|---|
| Resolution | 12 MP | 12 MP |
| Lens aperture | f/1.8 | f/2.0 |
| Sensor size | 1/2.6-inch | 1/2.3-inch |
| Pixel size | 1.4 μm | 1.55 μm |
| OIS | Yes | No |

Interestingly, Google has improved camera quality on the new Pixel 2/XL while reducing the actual sensor size: the new phones have a smaller, 1/2.6-inch sensor, while the original Pixel has a 1/2.3-inch one. The original Pixel, despite its larger sensor, did not have a camera bump, while the new Pixel does have one (this probably has something to do with the addition of optical image stabilization, which takes extra space). Another welcome upgrade is the aperture: at f/1.8 versus f/2.0 it is now wider, so more light can get through.
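To put the spec changes in perspective, here is a rough back-of-the-envelope sketch in Python. It assumes the light reaching each pixel scales with pixel area and with the inverse square of the f-number, and it ignores lens transmission, microlenses and sensor efficiency. The function names are made up for this illustration.

```python
def relative_light(f_number_old: float, f_number_new: float) -> float:
    """Light per unit of sensor area, new lens relative to old lens.

    Assumption: illumination scales with 1 / f_number**2.
    """
    return (f_number_old / f_number_new) ** 2


def relative_pixel_area(pitch_old_um: float, pitch_new_um: float) -> float:
    """Area of one new pixel relative to one old pixel (square pixels assumed)."""
    return (pitch_new_um / pitch_old_um) ** 2


# Figures from the spec table: f/2.0 -> f/1.8 and 1.55 um -> 1.4 um.
aperture_gain = relative_light(2.0, 1.8)
pixel_area_ratio = relative_pixel_area(1.55, 1.4)
light_per_pixel = aperture_gain * pixel_area_ratio

print(f"aperture gain:    {aperture_gain:.2f}x")
print(f"pixel area ratio: {pixel_area_ratio:.2f}x")
print(f"light per pixel:  {light_per_pixel:.2f}x")
```

Under these simplified assumptions, the wider aperture roughly cancels out the smaller pixels, so each pixel ends up collecting about as much light as before.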

Portrait Mode explained

The same picture without (left) and with (right) Portrait Mode

There are three key things that happen on the Pixel 2/XL to enable portrait mode with just one camera: HDR+, a segmentation mask and a depth map.

1. HDR+, the first step

It all starts with HDR+. What is it? HDR alone stands for "high dynamic range" and has been on phones for years. It works by taking multiple pictures at different exposures - a darker one, a brighter one - and combining them into one picture where the detail in both the dark and the bright areas is well preserved. HDR+ on the Pixel 2 is a whole different animal. It takes up to 10 photographs, way more than others, and does this every time. Those pictures are underexposed to avoid blown-out (burned) highlights, and are then aligned and combined into one picture. Here is what the results look like:

HDR+ off (left), HDR+ on (right)
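Why shoot a burst of dark frames instead of one bright one? A minimal Python sketch of the idea, not Google's actual pipeline: it assumes the frames are already aligned, simulates a flat gray patch with random noise, and simply averages the burst. All names and numbers here are illustrative.

```python
import random


def merge_burst(frames, gain=1.0):
    """Average aligned frames pixel by pixel, then apply an optional brightening gain."""
    n = len(frames)
    merged = [sum(pixels) / n for pixels in zip(*frames)]
    return [min(255.0, value * gain) for value in merged]


def noise_level(pixels, true_value):
    """Root-mean-square error against the known noise-free value."""
    return (sum((p - true_value) ** 2 for p in pixels) / len(pixels)) ** 0.5


random.seed(0)
TRUE_VALUE = 40.0  # a deliberately dark (underexposed) flat gray patch

# Ten simulated frames of 1000 pixels each, every pixel with its own random noise.
frames = [
    [TRUE_VALUE + random.gauss(0, 8) for _ in range(1000)]
    for _ in range(10)
]

single_noise = noise_level(frames[0], TRUE_VALUE)
merged_noise = noise_level(merge_burst(frames), TRUE_VALUE)
print(f"noise in one frame:     {single_noise:.2f}")
print(f"noise after merging 10: {merged_noise:.2f}")
```

Because the noise differs from frame to frame while the scene stays the same, averaging cancels much of the noise. That is what lets the shadows be brightened afterwards without turning grainy.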

2. The power of AI

Secondly, using the HDR+ picture, the phone has to decide which part of the image should be in focus and which should be blurred. This is where machine learning comes to help: Google has trained a neural network to recognize people, pets, flowers and the like. Google uses a convolutional neural network, and this alone is worth a separate look, but let's just say that the network filters the image by computing a weighted sum of the neighbors around each pixel. It starts by picking out colors and edges, then continues to filter until it finds faces, then facial features, and so on. The network was trained on nearly a million pictures of people (some with hats, others with sunglasses, etc). The quality of this filtering is essential to the quality of the final image, and it is the key advantage of the Pixel 2 camera. The output is the so-called "segmentation mask", which marks the pixels that belong to the subject, and it is already very good.
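To make "a weighted sum of the neighbors around each pixel" concrete, here is a small Python sketch of a single convolution filter. The kernel below is a hand-picked vertical edge detector used purely for illustration. A real network learns its kernel weights from training data and stacks many such layers.

```python
def convolve(image, kernel):
    """Weighted sum of each pixel's neighborhood (no padding, no kernel flip,
    which is how neural network "convolutions" are usually computed)."""
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    return [
        [
            sum(
                image[y + i][x + j] * kernel[i][j]
                for i in range(kh)
                for j in range(kw)
            )
            for x in range(out_w)
        ]
        for y in range(out_h)
    ]


# Illustrative kernel: responds where brightness rises from left to right.
VERTICAL_EDGE = [
    [-1, 0, 1],
    [-1, 0, 1],
    [-1, 0, 1],
]

# A tiny image: dark on the left, bright on the right.
image = [[0, 0, 0, 9, 9, 9] for _ in range(4)]

response = convolve(image, VERTICAL_EDGE)
for row in response:
    print(row)
```

The filter outputs zero over flat areas and a strong response where the dark and bright halves meet, which is the kind of low-level edge signal the early layers of such a network pick up.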

3. Innovative use of Dual Pixels

There are a few problems with the image produced using the segmentation mask alone: first and foremost, the applied blur is uniform throughout the background. The actual scene, however, contains objects at different distances from the camera, and a camera with a large lens would have blurred them to different degrees. The tiny pastry in the front would also have been blurred by such a camera, because it sits too close to the lens.

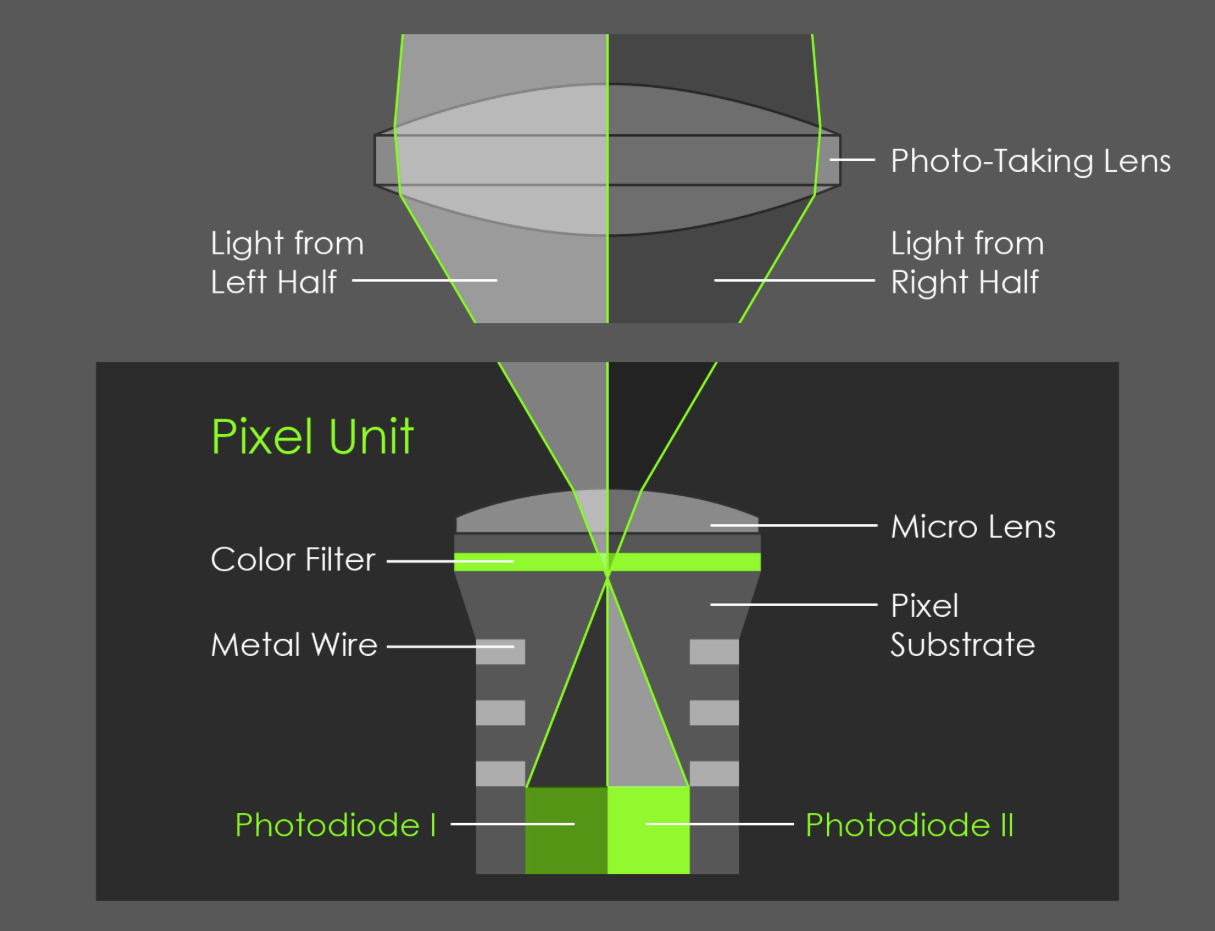
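The mask-only approach described above can be sketched in a few lines of Python. This is a simplified illustration on a single row of pixels with a plain box blur, not Google's implementation: everything outside the mask gets the same blur, and everything inside it stays untouched.

```python
def box_blur(row, radius):
    """Replace each pixel with the average of its neighbors within `radius`."""
    out = []
    for i in range(len(row)):
        lo, hi = max(0, i - radius), min(len(row), i + radius + 1)
        window = row[lo:hi]
        out.append(sum(window) / len(window))
    return out


def composite(sharp, blurred, mask):
    """Keep the sharp pixel where mask is 1 (subject), the blurred one where it is 0."""
    return [m * s + (1 - m) * b for s, b, m in zip(sharp, blurred, mask)]


row = [10, 90, 10, 90, 50, 50, 10, 90]   # one row of pixel brightness values
mask = [0, 0, 0, 0, 1, 1, 0, 0]          # 1 = subject, 0 = background

result = composite(row, box_blur(row, 1), mask)
print(result)
```

Note that every background pixel gets the same blur radius regardless of how far away it is, and that this naive version lets subject pixels bleed into the blurred background next to the mask edge.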

Google needs more information to create proper blur, and this is where the "depth map" comes in. The Pixel 2 has a dual pixel camera sensor. This is not new: Samsung phones were the first to have it. What it boils down to is that each pixel on the camera sensor is actually divided into two small sub-pixels that capture the same scene from a very, very slightly different angle (the technology is called Dual Pixel Auto Focus, or DPAF). This difference was used in the Galaxy S7 and later top-tier Samsung phones for their ultra-fast auto-focusing. Google has found another use for it: the phone measures this very slight difference to determine how far away each object in the frame is from the camera.
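The core of that measurement is finding how far a detail has shifted between the two sub-pixel views. Here is a toy Python sketch of the idea using brute-force matching on a single row of pixels. It only finds whole-pixel shifts, whereas the shifts on a real dual pixel sensor are a fraction of a pixel, and the sample values are invented for the example.

```python
def best_shift(left, right, max_shift):
    """Return the shift s (in pixels) that minimizes the mean |left[i] - right[i + s]|."""
    best, best_cost = 0, float("inf")
    for s in range(-max_shift, max_shift + 1):
        # Compare only the positions where both views overlap for this shift.
        pairs = [
            (left[i], right[i + s])
            for i in range(len(left))
            if 0 <= i + s < len(right)
        ]
        cost = sum(abs(a - b) for a, b in pairs) / len(pairs)
        if cost < best_cost:
            best, best_cost = s, cost
    return best


# The same bright detail, seen two pixels further right in the second view.
left = [0, 0, 5, 9, 5, 0, 0, 0, 0, 0]
right = [0, 0, 0, 0, 5, 9, 5, 0, 0, 0]

disparity = best_shift(left, right, max_shift=3)
print(disparity)
```

Repeating this search for small tiles across the whole image gives one shift value per tile, and since the shift varies with an object's distance, those values form the raw material for a depth map.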

The final result

Here is the resulting depth map, which is also quite amazing: the brighter parts are closer, while the darker parts are farther away:

Or as Google fondly puts it:

"Specifically, we use our left-side and right-side images (or top and bottom) as input to a stereo algorithm similar to that used in Google's Jump system panorama stitcher (called the Jump Assembler). This algorithm first performs subpixel-accurate tile-based alignment to produce a low-resolution depth map, then interpolates it to high resolution using a bilateral solver."

If it sounds complicated, that is because it really is. Some impressive engineering work went into perfecting this, and Google even uses an additional burst of images to filter out noise and get more accurate results. The outcome is the depth map.

Final result, after HDR+, segmentation mask and depth map

Finally, all of these steps are combined into one single image that looks stunning. Notice how the amount of blur is correct and even the pastry up front is blurred. Notice, too, how every single strand of hair is properly recognized and in sharp focus. Portrait mode on the Pixel 2 works on people with frizzy hair (get that, Apple?), on people holding ice cream cones, people with glasses and hats, people holding bouquets, and in macro photography.
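One way to picture how the mask and the depth map work together is the following Python sketch. It is an assumption-laden illustration rather than Google's actual rendering code: the function name, the depth units and the `strength` and `max_radius` parameters are all made up, and the blur is simply taken to grow with distance from the in-focus plane.

```python
def blur_radius(depth, focus_depth, is_subject, strength=4.0, max_radius=12):
    """Blur radius in pixels for one pixel of the final image.

    depth, focus_depth: distances in arbitrary units (hypothetical scale).
    is_subject: True where the segmentation mask marks the subject.
    """
    if is_subject:
        return 0  # the segmentation mask keeps the subject sharp
    # Blur grows with distance from the focus plane, in front of it or behind it.
    radius = strength * abs(depth - focus_depth)
    return min(max_radius, round(radius))


FOCUS = 1.0  # the person's distance from the camera

print(blur_radius(1.0, FOCUS, is_subject=True))    # the person
print(blur_radius(0.5, FOCUS, is_subject=False))   # the pastry in front
print(blur_radius(3.0, FOCUS, is_subject=False))   # a wall further back
print(blur_radius(10.0, FOCUS, is_subject=False))  # the far background
```

In this sketch the pastry in front of the subject gets some blur, objects further behind get more, and the subject itself gets none, which matches the behavior seen in the final image.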

For the front camera, Google cannot use the last step: the front camera lacks a dual pixel sensor, so it relies only on the first two steps, HDR+ and the segmentation mask. The results still look much better than on competing phones, whose front cameras completely lack such a feature.

Finally, here are some tips Google shares to make the most out of portrait mode and some Portrait images shot on the Pixel 2/XL:

- Stand close enough to your subjects that their head (or head and shoulders) fill the frame.

- For a group shot where you want everyone sharp, place them at the same distance from the camera.

- For a more pleasing blur, put some distance between your subjects and the background.

- Remove dark sunglasses, floppy hats, giant scarves, and crocodiles.

- For macro shots, tap to focus to ensure that the object you care about stays sharp.

source: Google Research
