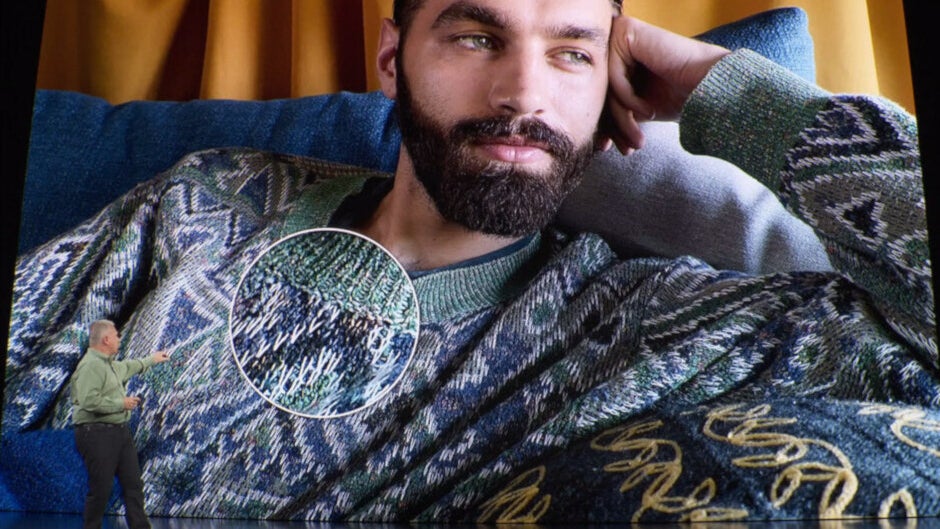

A feature coming with iOS 13.2 will take photography on the 2019 Apple iPhones to a new level

If you bought one of the new 2019 iPhone models and have been impressed with the photos you've been taking with the device, you have not yet seen what the handset is fully capable of. That's because Apple has yet to push out the promised software update that adds the AI-based Deep Fusion technology to the phones. Apple introduced the feature on September 10th at the product event that unveiled the iPhone 11, iPhone 11 Pro and iPhone 11 Pro Max.

With Deep Fusion, four frames are captured using a fast shutter speed and four are shot at a standard speed, all before you press the shutter button. When the shutter is pressed, a long-exposure image is taken to capture details. Three regular shots and the long-exposure shot are merged into one picture, which is then merged with the short-exposure image that has the best detail. This is all done using AI. In a split second, the image is optimized pixel by pixel to generate a final picture with great detail and low noise.
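The merge order described above can be sketched in a few lines of Python. This is a toy illustration only, not Apple's implementation: the real pipeline is driven by machine learning, while this sketch swaps in simple averaging and a fixed blend. The function names, the neighbor-difference sharpness metric and the `detail_weight` parameter are all assumptions made up for the example.

```python
# Toy sketch of the merge order described in the text. Frames are tiny
# grayscale images stored as lists of rows. All names are hypothetical.

def average_frames(frames):
    """Merge frames by taking the per-pixel mean."""
    height, width = len(frames[0]), len(frames[0][0])
    return [
        [sum(f[y][x] for f in frames) / len(frames) for x in range(width)]
        for y in range(height)
    ]

def detail_score(frame):
    """Assumed sharpness metric: sum of absolute differences between
    each pixel and its right and lower neighbors."""
    score = 0.0
    for y, row in enumerate(frame):
        for x, value in enumerate(row):
            if x + 1 < len(row):
                score += abs(value - row[x + 1])
            if y + 1 < len(frame):
                score += abs(value - frame[y + 1][x])
    return score

def fuse(short_frames, regular_frames, long_exposure, detail_weight=0.5):
    # Step 1: merge the regular shots with the long exposure.
    base = average_frames(regular_frames + [long_exposure])
    # Step 2: pick the short-exposure frame with the most detail.
    sharpest = max(short_frames, key=detail_score)
    # Step 3: blend the two results pixel by pixel (fixed weight here;
    # the text says the real system decides this per pixel using AI).
    return [
        [(1 - detail_weight) * b + detail_weight * s
         for b, s in zip(base_row, sharp_row)]
        for base_row, sharp_row in zip(base, sharpest)
    ]
```

Calling `fuse` with a list of short-exposure frames, three regular frames and one long-exposure frame returns a single blended frame of the same size. A fixed weight is used only to keep the sketch short.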

Deep Fusion has three different modes based on the ambient lighting and the lens being employed. The primary Wide-angle camera will use Deep Fusion for photos in medium light to low light, with Night Mode automatically turning on for anything darker. The telephoto camera works at extremes; in very bright light Deep Fusion will get the nod, while Night Mode takes over in very dark conditions. And with the Ultra-wide camera, neither Deep Fusion nor Night Mode is supported.

Deep Fusion is expected to come with iOS 13.2 and will make photos more detailed with less noise

The good news for those with a 2019 iPhone is that Deep Fusion will be available once iOS 13.2 is released to the public. The developer beta version of the build was expected to have been released today, but the status now is "coming" according to a tweet from TechCrunch's Matthew Panzarino (@panzer). If you are a member of the Apple Beta Software Program, you should have the opportunity to try out Deep Fusion pretty soon. But remember, since it won't be a final build, you will have to weigh the risk of using unstable software against the wait for the final version. If you've never loaded an iOS beta version on your iPhone before, you are probably best served by waiting for the stable build to be released.

The camera wars are heating up after the release of the Apple iPhone 11 family. Two weeks from today, on October 15th, Google will introduce the new Pixel 4 series. While Apple has embraced computational photography this year, this is something that the Pixels have long been known for. The Pixels are considered among the best smartphones for photography (if not the best), and Google has flexed its processing muscles over the years while equipping them with a single 12.2MP camera on the back. Times have really changed when it seems weird that a phone has only a single camera adorning the rear panel.

The triple-camera setup on the back of the Apple iPhone 11 Pro

Meanwhile, the new Pixels will once again feature a 12.2MP primary camera, although this time the aperture is a little wider at f/1.6. This will allow more light to be captured when a photo is being taken. For the first time, there will be a second sensor on the back; this will be a 16MP telephoto camera with a 5x optical zoom. And while Apple has been praised for its Night Mode, which allows users to snap viewable photos in low-light and dark conditions, Google is expected to respond with a second-generation version of Night Sight. The update could add longer exposure times to Night Sight, allowing Pixel 4 users to engage in astrophotography. This would allow the phones to be used to shoot stunning (we assume) photos of the night sky, including stars.
