Google just dropped a ton of updates for those who want to make apps for Android and the Play Store

The new tools are designed to lower development costs and maximize results.

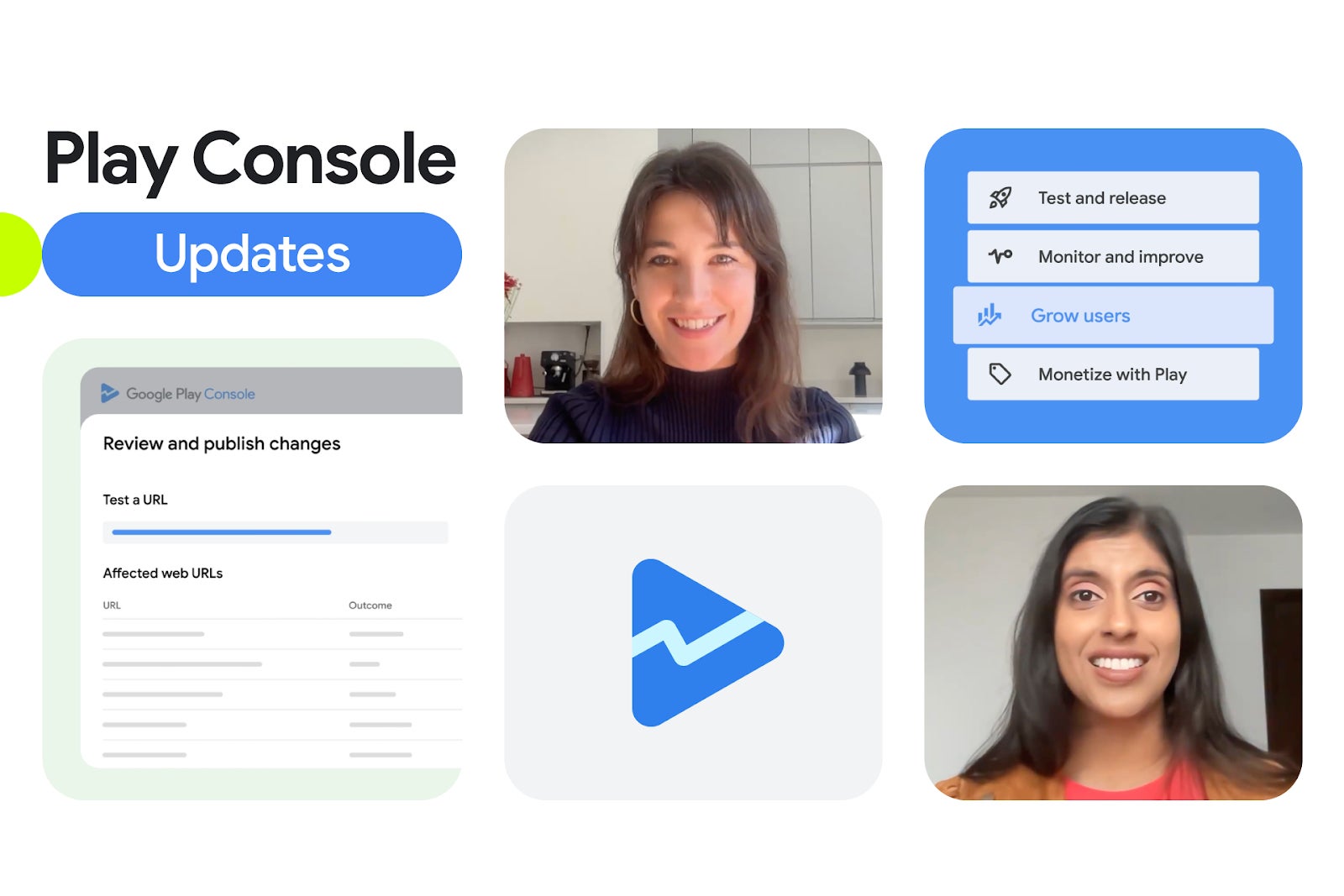

Google is rolling out a massive suite of AI features for Android and Google Play developers, highlighted in the latest edition of The Android Show. The goal is to make it simpler to build apps and more effective to grow them into sustainable businesses.

What's new with Google's AI tools for developers

You can use the Play Console to validate deep links and perform other tasks. | Image credit: Google

Google just announced a bundle of new AI-powered tools aimed at both sides of the app equation: building and growing. It's all about lowering development costs and maximizing your results on the Play Store.

On the Google Play side, two things stand out. First, Google is integrating Gemini to offer high-quality, free translations of your app's text (what developers call "strings"). This is huge for helping developers reach global markets without a big budget. Second, the Statistics page is getting Gemini-powered chart summaries. Instead of leaving you to interpret an unexpected spike on your own, the AI automatically describes the key trends and events affecting your metrics.

For the coders, Android Studio is getting much smarter. New agentic capabilities let you hand complex tasks to the AI, such as upgrading your project's APIs. You'll also be able to bring your own large language model (LLM) to power the AI inside the IDE. And for in-app features, Google is opening up the on-device Gemini Nano model through a new Prompt API, letting developers build AI features that run locally on the device.

Why this is a big deal for app development

This isn't just Google tinkering; it's a direct shot in the AI arms race, especially against Apple. Apple seems focused on how it will use AI itself, whereas Google is handing developers the raw tools: direct access to on-device AI through the Prompt API, plus cloud models like Nano Banana and Imagen through Firebase.

This is a smart approach because it lowers the barrier to entry for smaller developers (free translations, easier-to-read stats) while also handing high-end tools to advanced teams (on-device Gemini Nano, agent-based coding). It makes the entire Android ecosystem more competitive, not just the operating system itself.

Google wants more developers to take advantage of these tools

Full disclosure: I'm not a developer myself. I'm not the one who's going to be plugging into the new Prompt API or using Agent Mode to upgrade APIs. But even from the outside, I can absolutely see how beneficial this entire suite of tools is.

Take the new Gemini-powered chart summaries. You don't need to be a coder to understand the value of an AI just telling you why your app's metrics are changing. That saves time and headaches for everyone, from a solo dev to a large studio. The same goes for the free, high-quality app translations; that’s a direct line to a global audience without the associated cost.

It’s no secret that Android needs a vibrant, growing developer community to thrive. These moves feel like Google is doing everything it can to roll out the red carpet. By making these powerful AI tools so easy to access and use, Google isn't just helping its current developers; it's giving new ones a reason to join. This may be the most effective way for Google to make Android the place to build what's next.
