Google Assistant, Siri, Alexa and other digital helpers have a dangerous vulnerability (Videos)

We know that Amazon, Google, and Apple transcribe requests and questions asked of Alexa, Google Assistant, and Siri respectively. The companies say that this is done to improve these AI-based helpers. If you think that this is the only privacy issue that you'll face with the virtual digital assistant on your phone, tablet or speaker, guess again. A paper published yesterday discusses the use of laser attacks on virtual assistants that can "inject" them with commands that cannot be heard or seen. The attack has been given the name "Light Commands" and exploits a vulnerability found in the small, high-quality MEMS (micro-electro-mechanical systems) microphones that are used in speakers, phones and tablets. The hack works because the laser can be manipulated to trick the microphones inside a device into believing that they are receiving audio commands. In other words, the bad actor is essentially hijacking the voice assistant.

A Light Commands attack can lead to unauthorized online purchases

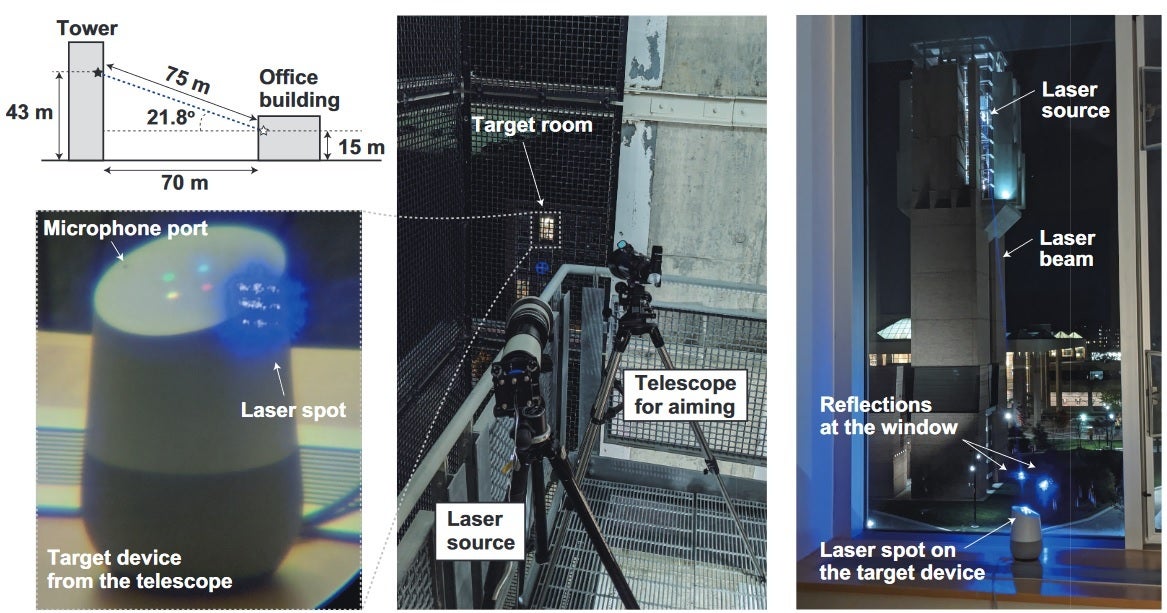

Putting together a Light Commands system is not expensive. All it takes is a laser pointer, a laser driver and a sound amplifier. A telephoto lens can be used for long-distance attacks. Altogether, such a setup could cost less than $581 with most of these items available from Amazon.
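To make the role of the laser driver and amplifier more concrete, the sketch below shows one way an audio signal could be carried in the brightness of a laser: the drive current swings above and below a fixed bias in step with the audio samples (simple amplitude modulation). This is an illustration only, not the researchers' code. The function names and the current values (`bias_ma`, `depth_ma`) are hypothetical numbers chosen for the example.

```python
import math


def tone(freq_hz, sample_rate, n_samples):
    """Generate a test sine tone with samples in [-1, 1]."""
    return [
        math.sin(2 * math.pi * freq_hz * i / sample_rate)
        for i in range(n_samples)
    ]


def modulate(audio, bias_ma=200.0, depth_ma=75.0):
    """Map audio samples to a laser drive current in milliamps.

    bias_ma and depth_ma are hypothetical values for illustration:
    the current rests at bias_ma and swings by at most depth_ma.
    """
    current = []
    for sample in audio:
        clipped = max(-1.0, min(1.0, sample))  # keep the swing bounded
        current.append(bias_ma + depth_ma * clipped)
    return current


# A 1 kHz test tone sampled at 16 kHz, turned into a drive-current waveform.
drive = modulate(tone(1000, 16000, 16))
```

Silence (a sample of 0) leaves the current at the bias, so the beam stays on at constant brightness. Only the variation around that level carries the "sound" that the microphone responds to.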

The report lists several devices that are susceptible to Light Commands and points out that "While we do not claim that our list of tested devices is exhaustive, we do argue that it does provide some intuition about the vulnerability of popular voice recognition systems to Light Commands." The devices listed include:

- Google Home (Google Assistant)

- Google Home mini (Google Assistant)

- Google Nest Cam IQ (Google Assistant)

- Echo Plus 1st Generation (Alexa)

- Echo Plus 2nd Generation (Alexa)

- Amazon Echo (Alexa)

- Echo Dot 2nd Generation (Alexa)

- Echo Dot 3rd Generation (Alexa)

- Echo Show 5 (Alexa)

- Echo Spot (Alexa)

- Facebook Portal Mini (Alexa)

- Fire Cube TV (Alexa)

- Ecobee 4 (Alexa)

- iPhone XR (Siri)

- iPad 6th Gen (Siri)

- Samsung Galaxy S9 (Google Assistant)

- Google Pixel 2 (Google Assistant)

The report notes that speaker recognition is usually disabled by default for smart speakers, but is enabled by default on handsets and tablets. Even when enabled, this safeguard only checks whether the assistant's hotword ("Ok, Google," "Hey Siri," "Alexa") was spoken by the owner of the device. Once the assistant is activated, a Light Commands attack can send it a fake request. And as the report points out, "speaker recognition for wake-up words is often weak and can be sometimes bypassed by an attacker using online text-to-speech synthesis tools for imitating the owner's voice."
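The gap described above can be sketched as a toy model, in which the owner check happens only at the hotword and never at the command that follows. This is a simplified illustration, not how any real assistant is implemented. The `Assistant` class, its method names, and the use of a plain string to stand in for a voice are all hypothetical.

```python
from dataclasses import dataclass, field


@dataclass
class Assistant:
    """Toy model of a voice assistant (hypothetical, for illustration)."""
    owner_voice: str
    speaker_check_enabled: bool = True  # typical for phones and tablets
    awake: bool = False
    executed: list = field(default_factory=list)

    def hear_hotword(self, voice: str) -> bool:
        # Speaker recognition, when enabled, applies only at this step.
        if self.speaker_check_enabled and voice != self.owner_voice:
            return False
        self.awake = True
        return True

    def hear_command(self, voice: str, command: str) -> bool:
        # No speaker check here: once awake, any voice is accepted.
        if not self.awake:
            return False
        self.executed.append(command)
        self.awake = False
        return True


phone = Assistant(owner_voice="owner")
phone.hear_hotword("owner")                        # passes the owner check
phone.hear_command("attacker", "place an order")   # accepted anyway
```

In this model, a smart speaker with `speaker_check_enabled=False` accepts the hotword from anyone, which matches the default the report describes for smart speakers.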

Setting up a cross-building Light Commands attack

While those with a device under a Light Commands attack won't hear anything alerting them that something suspicious is happening, a user might be able to detect the light from the laser reflecting off the target device. And while the report does point out how this vulnerability could be exploited, it notes that there are no indications that such an attack has been used in the wild. To be safe, it might be a good idea to make sure that your smart speaker isn't placed near a window that would give an attacker the clear line of sight needed to launch such an attack.
