Only one smartphone assistant can be considered the best after crushing its rivals in a new test

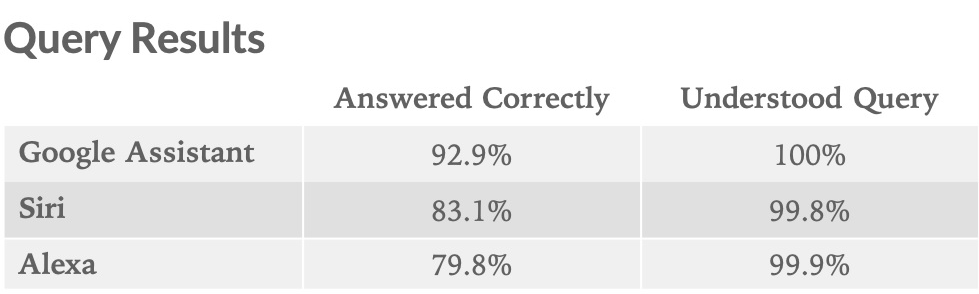

Venture capital firm Loup Ventures recently put the three major digital assistants to the test. Google Assistant, Amazon's Alexa and Apple's Siri were each given the same 800 questions and tasks. Each assistant was scored on two measures: whether it understood the query, and whether it answered or responded correctly. The results? Google Assistant understood all 800 questions and answered 93% of them correctly. Siri understood 99.8% of the questions and responded correctly 83.1% of the time. Alexa nearly matched Google Assistant's perfect comprehension with a score of 99.9%, but it had the lowest accuracy of the three at 79.8%.

All three digital assistants improved on last year's results. In that test, Google Assistant answered 86% of the questions correctly, Siri was correct 79% of the time and Alexa gave the right response 61% of the time. The report, written by analysts Gene Munster and Will Thompson, notes an important caveat: this test, like last year's, measures how these digital assistants respond on a smartphone rather than on a smart speaker. That distinction matters because a speaker has no screen, which can change how an assistant answers compared with a phone. Because this test was run on phones, the questions were shorter. The screen also allowed the assistants to answer some questions without having to speak the response aloud.

Test shows that Google Assistant is the best digital helper found on a smartphone

"We separate smartphone-based digital assistants from smart speakers because, while the underlying technology is the same, use cases vary. For instance, the environment in which they are used may call for different language, and the output may change based on the form factor; e.g., screen or no screen. We account for this by adjusting the question set to reflect generally shorter queries and the presence of a screen which allows the assistant to present some information that is not verbalized." - Loup Ventures

Google Assistant is the top smartphone assistant according to Loup Ventures

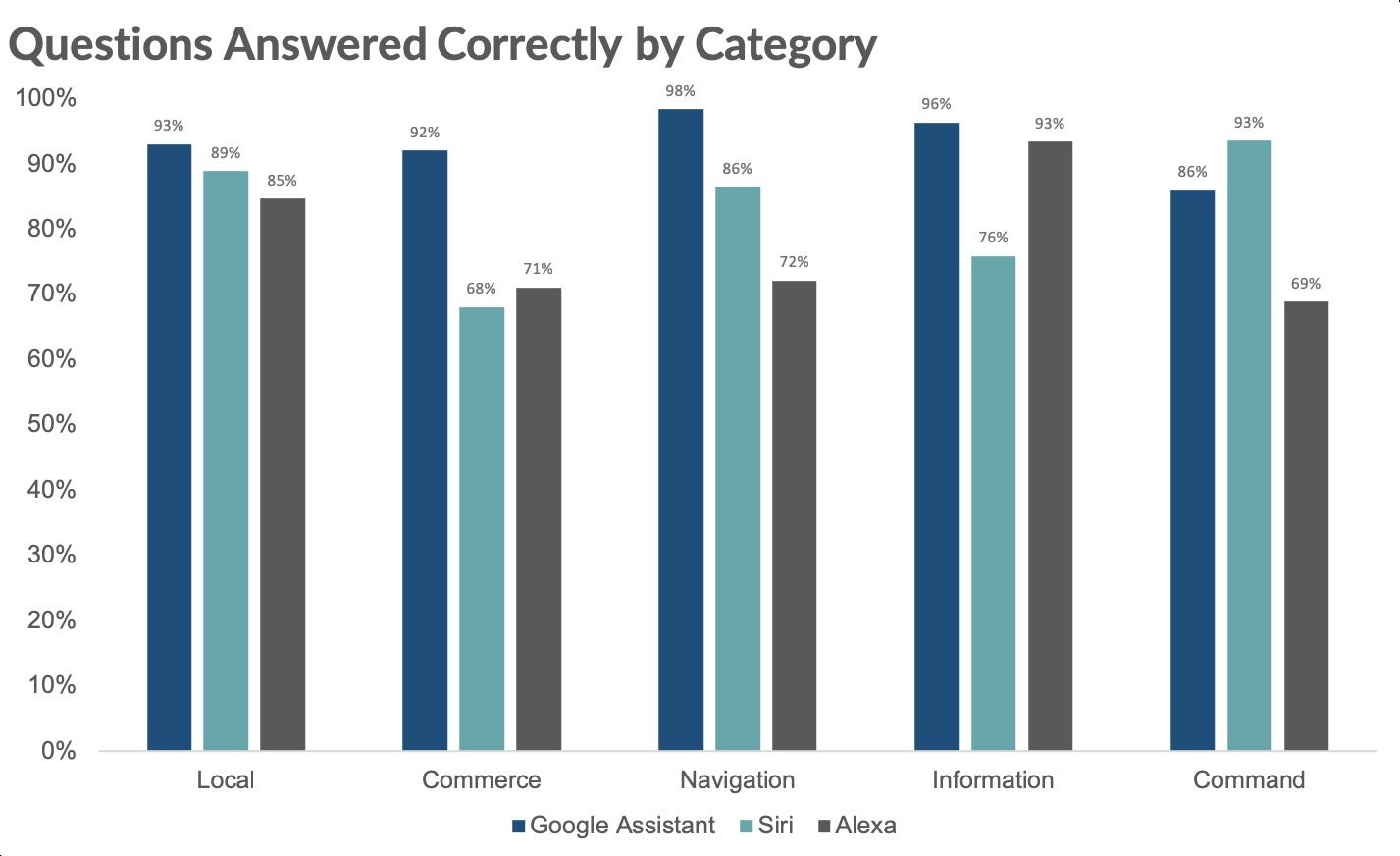

Each of the three digital assistants was asked questions in five categories: Local, Commerce, Navigation, Information and Command. Google Assistant had the best score in every category except Command, which covered phone-related tasks such as email, texting, calendar and music. Siri topped that category, beating Google Assistant 93% to 86%. Siri finished second in Local ("Where is the nearest book store?") and Navigation ("Which subway do I take to get downtown?") but came last in Commerce ("Order me a 24-pack of Coca-Cola and three boxes of M&M cones"). Alexa finished second in Commerce and also placed second in Information ("What time do the Yankees play tonight?"). Given its Amazon parentage, Alexa was the favorite in Commerce, but it was unable to top Google Assistant. Alexa was also at a disadvantage because it runs as an app rather than as a native OS feature, which held back its performance in the Command category.

Google Assistant had the best score in four out of five categories

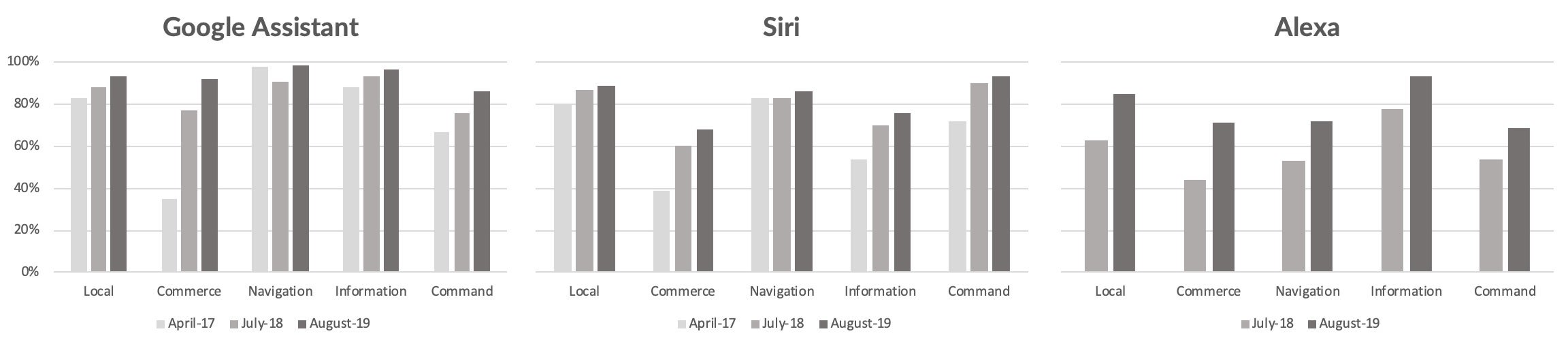

The test was conducted on an iPhone running iOS 12.4 and a Pixel XL running Android 9 Pie, with Alexa tested through its iOS app. Interestingly, although Alexa finished last in accuracy, Amazon's digital assistant improved by 18 percentage points over the last 13 months, the largest gain among the three. Over the same period, Google Assistant's score rose 7 percentage points and Siri's rose 5 percentage points.

Alexa improved its score by 18 percentage points over the last 13 months

Since the first test was conducted in April 2017, Google Assistant and Siri have improved the most in the Commerce category. Alexa, which was not part of the first test, has also seen its biggest improvement over the last 13 months in Commerce.
