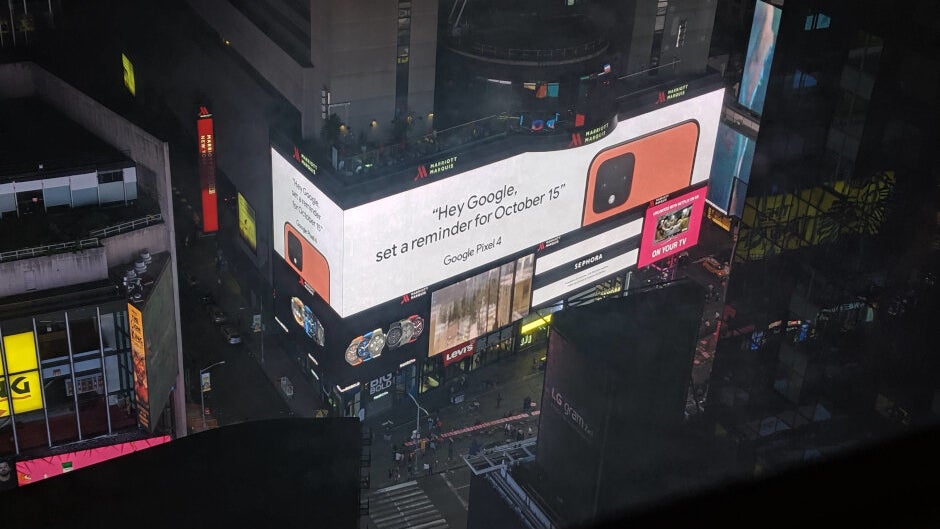

Google's temps told to find homeless and people with darker skin for Face unlock data

Back in July, Google released a video confirming that the new Pixel 4 series will be equipped with a more secure Face unlock feature. About a week before that video came out, word got out about a facial recognition project the company had embarked on. To test and improve this more advanced Face unlock, Google sent teams to major cities with a heavily disguised Pixel 4 handset; in exchange for a $5 Amazon or Starbucks gift card, contractors collected selfies and videos of random people. But according to the New York Daily News, this seemingly innocuous program actually had a racial undertone to it.

Random subjects waiting in line to snag a $5 Starbucks gift card

Apparently, current facial recognition technology has had problems identifying users with darker skin. To make sure this does not become an issue with the Face unlock system debuting on the Pixel 4 line, or with future iterations of the feature, the data collection effort Google has been running targeted homeless people in Atlanta, attendees of the BET Awards show in L.A., and college campuses around the U.S. This information comes from Daily News sources who worked on the project.

The temps collecting the data were told to focus on the homeless, college students and people with darker skin

The report noted that the temps who collected the data for Google were known as TVCs, an acronym indicating that these people were temps, vendors or contractors. The temps were paid through a third-party staffing company called Randstad. The temps also indicated that Google used misleading methods to obtain these images. One of the TVCs told the newspaper, "We were told not to tell (people) that it was video, even though it would say on the screen that a video was taken. If the person were to look at that screen after the task had been completed, and say, 'Oh, was it taking a video?'… we were instructed to say, 'Oh it's not really.'"

"We regularly conduct volunteer research studies. For recent studies involving the collection of face samples for machine learning training, there are two goals. First, we want to build fairness into Pixel 4's face unlock feature. It's critical we have a diverse sample, which is an important part of building an inclusive product." - Google

The temps were taught to rush their subjects through survey questions and to walk away if anyone got too suspicious. They were also told to target the homeless because they were less likely to approach the media about the program, and to target college students because students live on a tight budget and would be interested in the $5 Starbucks card. As one of the TVCs said, "They (Google) were very aware of all the ways you could incentivize a person and really hone in on the context of the person to make it almost irresistible." Another temp said, "I feel like they wanted us to prey on the weak."

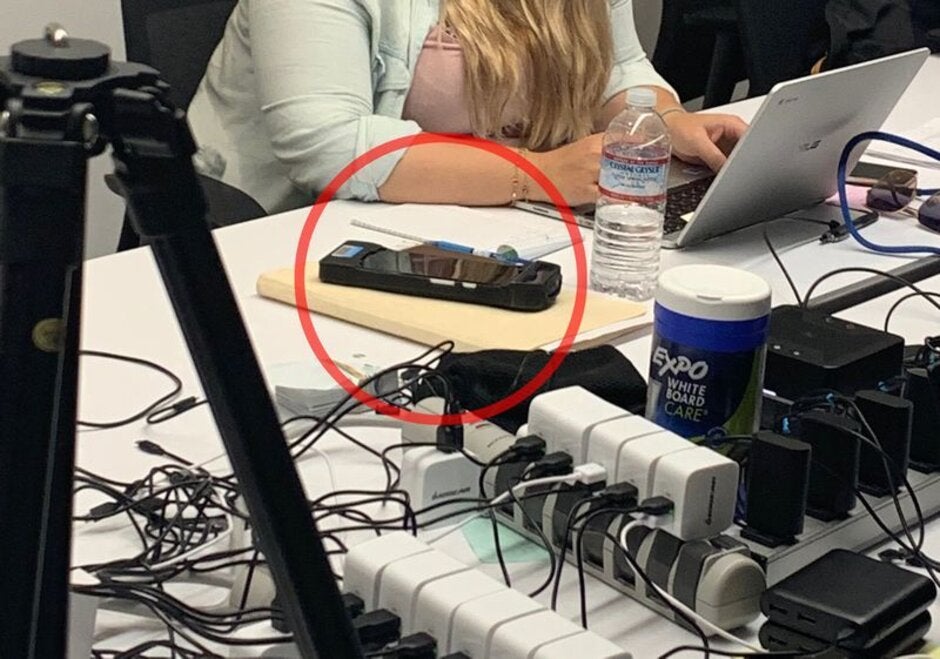

A heavily disguised Pixel 4 collects information to improve Face unlock

Here is what the spiel sounded like to those lending their faces and personal information to the project. 18-year-old Kelly Yam recalled the whole pitch used on her: "They just said, 'Do you want to enter a survey? We'll give you a Starbucks gift card.' We had to follow a dot with our nose. I did the action of holding the phone up to my face. Maybe next time I'll be more aware. I was mostly tempted by the gift card. They said it was a survey and we thought they were students. I don't think I even realized there was a consent form."

Another look at one of the devices used to collect images

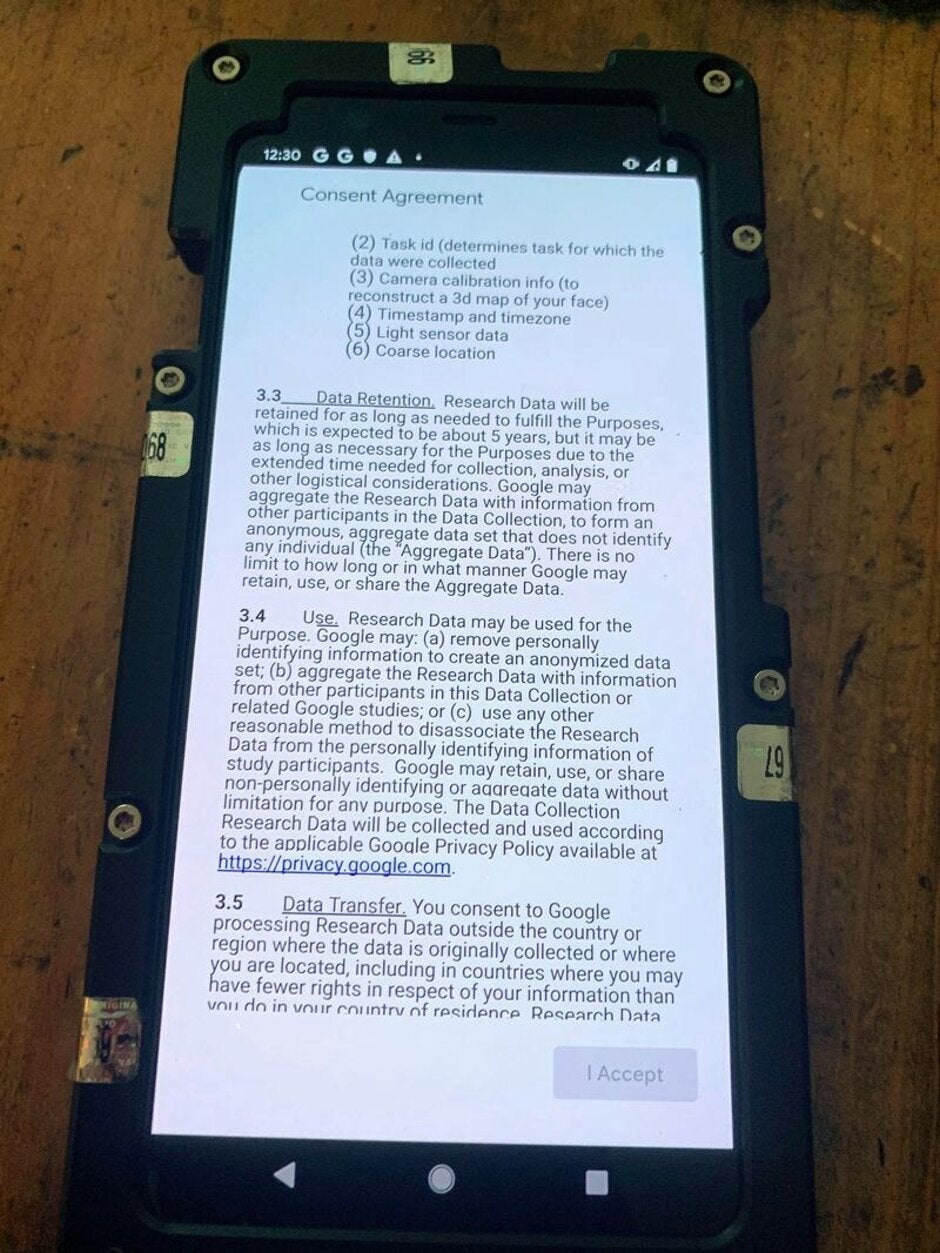

The agreement form that the subjects were supposed to sign noted that Google could keep the image of their face and any other information "as long as needed to fulfill the purposes which is expected to be about five years." It also noted that Google could aggregate the research data, which would make the participants anonymous. However, under the agreement, "there is no limit to how long or in what manner Google may retain, use or share the aggregate data." The agreement adds that Google can "retain, use or share non-personally identifying or aggregate data without limitation for any purpose."

The agreement that subjects needed to sign to receive their $5 card
