Game-changing AI in iPhone 15 will talk in your voice but can it get you kidnapped too?

This article may contain personal views and opinion from the author.

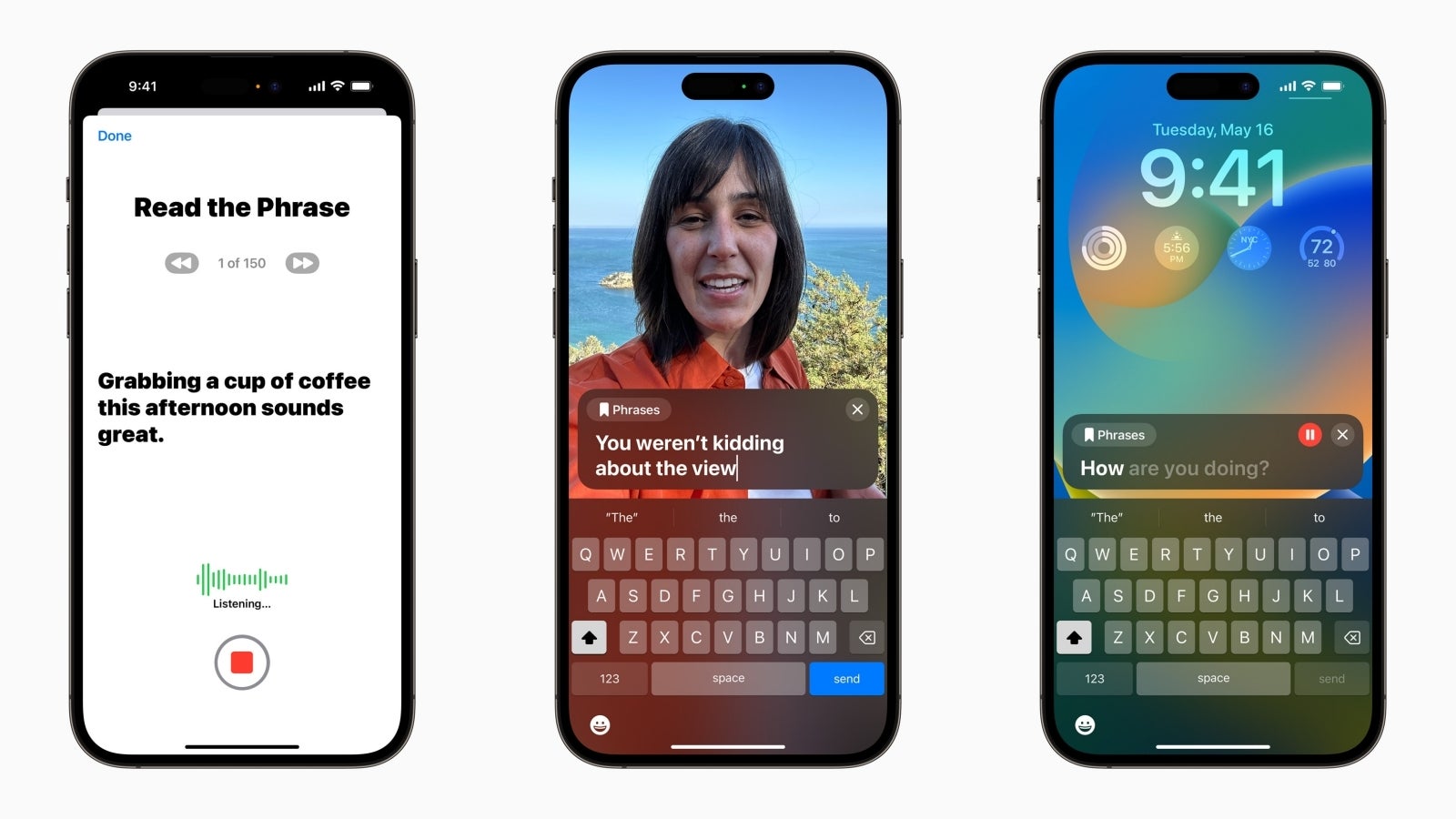

Users can create a Personal Voice by reading along with a randomized set of text prompts to record 15 minutes of audio on iPhone or iPad. This speech accessibility feature uses on-device machine learning to keep users’ information private and secure, and integrates seamlessly with Live Speech so users can speak with their Personal Voice when connecting with loved ones.

Apple

It should come as no surprise that Apple is approaching the Personal Voice AI feature from an angle of accessibility, which is said to be the key objective here. Cupertino has a solid track record of going above and beyond to make the iPhone more inclusive. However, this time around, many are also concerned about their privacy, and ultimate security.

So, should we be freaked out about the fact that the iPhone will soon be able to speak in our own voice? I don’t think so. If anything, I’m quite excited!

Scary but helpful - iPhones running iOS 17 will be able to talk in your “Personal Voice”; Apple enters the advanced AI race in the smartest way possible

I don’t know about you but I think Apple is being quite careful about entering the AI race, as accessibility might be one of the safest options when it comes to rationalizing the need for AI in iPhones and iPads. However, this doesn’t mean Apple has chosen an easy path.

Of course, no one’s had the chance to test Personal Voice just yet, so I’ll have to reserve any strong opinions for when the feature is released (it’s expected at the end of this year). But what we can do right now is talk about the positive nature of the advanced AI coming to iPhone. And what better way to make a positive impact than helping people get through life?

Unfortunately, it’s difficult to find global statistics of this kind, but according to those available in the US, approximately 18.5 million individuals have a speech, voice, or language disorder, which shows the clear need for making technology work for those who could benefit the most from it.

This is the moment to mention that rather than breaking new ground, Apple is simply tapping into the already existing world of Augmentative and Alternative Communication (AAC). AAC apps are designed to help nonspeaking people communicate more effectively through the use of symbols and predictive keyboards that produce speech. Many who are unable to produce oral speech, including those with ALS, cerebral palsy, and autism, have to use AAC apps to communicate.

AssistiveWare’s founder and CEO David Niemeijer hopes AAC apps like Proloquo2Go become as widely accepted as texting. “If you can’t speak, the assumption is still that you probably don’t have much to say. That assumption is the biggest problem. I hope to see a shift toward respecting this technology so it can have the biggest impact”, says Niemeijer. Bear in mind that while some AAC apps are free, the Premium version of Proloquo2Go currently costs $250 to download from the App Store.

Apple's Personal Voice - a game-changing feature that makes smartphones smart and our lives easier?

I believe this makes it a little clearer why Apple’s work towards making the iPhone and iPad more accessible should be the main talking point of a feature like Personal Voice. In a world of TikTok videos and Instagram stories, accessibility and Quality of Life (QoL) features like Personal Voice are a reminder that smartphones can (and should) exist to make our lives easier.

So, the fact that Apple’s Personal Voice will live directly on iPhone, without the need for any special software, should make using this (hopefully) game-changing piece of AI that much more accessible and “normal”.

Coming later this year, users with cognitive disabilities can use iPhone and iPad with greater ease and independence with Assistive Access; nonspeaking individuals can type to speak during calls and conversations with Live Speech; and those at risk of losing their ability to speak can use Personal Voice to create a synthesized voice that sounds like them for connecting with family and friends.

For users at risk of losing their ability to speak - such as those with a recent diagnosis of ALS (amyotrophic lateral sclerosis) or other conditions that can progressively impact speaking ability - Personal Voice is a simple and secure way to create a voice that sounds like them.

Personal Voice might be the new, improved, and supercharged version of Siri - can Apple’s most ambitious accessibility feature turn into the ultimate Google Assistant competitor?

What if Personal Voice is Apple’s hint that Siri will soon receive its biggest upgrade ever?

Now all that being said, as a “tech person”, I simply can’t help but look at Personal Voice’s extended potential. And let me explain what I mean by that...

By the looks of it, Personal Voice is essentially shaping up to be a text-to-speech engine, which can be useful in a number of different scenarios. I’d love to see a feature like Personal Voice expand to other iPhone and iPad apps like Voice Memos and Notes. I say that because finding a good piece of text-to-speech software that’s both free and natural-sounding is next to impossible. Think of the people who could use it:

- Students preparing for an exam

- Podcasters who hate reading boring ads

- Comedians trying to memorize a comedy set

- Actors trying to learn a script

I know… My imagination is running a bit wild here but I really think artists and the general public can make great use of a broader implementation of a feature like Personal Voice. The aforementioned examples might look funny, considering the current mission of Personal Voice, but I really do believe this is only the start of Apple’s AI transformation.

I may be overreaching, but I’m also asking myself whether Personal Voice could be the start of something even bigger, and far more controversial, like the idea of the Metaverse and how voice banking can make us “immortal”. Voice banking is a process that allows someone to create a synthetic voice that ideally sounds like their natural voice. It’s achieved by recording a large number of messages while your voice is still clear.

So, what if your voice can be preserved forever and/or combined with a virtual image of you, which can stay on after you’re “gone” gone? If this sounds fascinating to you, I recommend watching a brilliant show called “Upload”. It’s a sci-fi comedy-drama, which explores the idea of humans being able to "upload" themselves into a virtual afterlife of their choosing in the year 2033. That’s only ten years from now, folks!

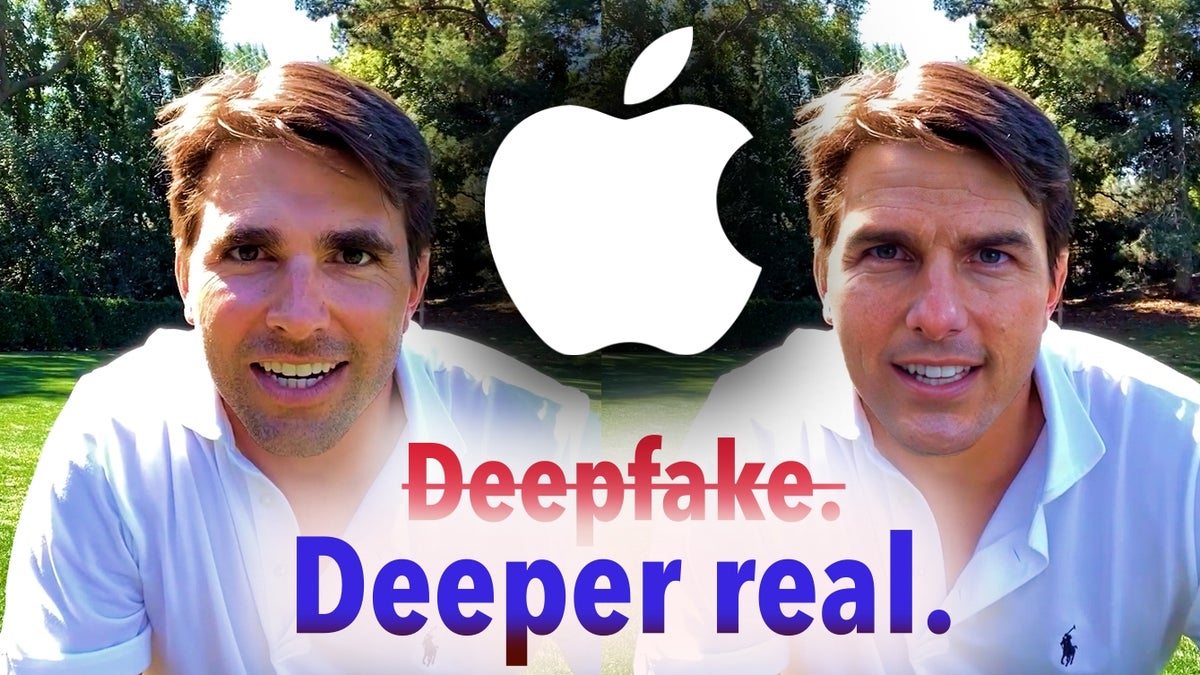

iPhone and iPad will be able to speak in your voice: Is Apple opening the door to scammers? Concerns over Virtual Kidnapping and Deepfakes arise

We believe in a world where everyone has the power to connect and communicate. To pursue their passions and discover new ones. On Global Accessibility Awareness Day (May 19), we celebrate inclusive technology that works for everyone.

Tim Cook

Now, about the controversial sides of Apple’s Personal Voice…

Apple’s promise of simple and secure AI doesn’t stop people from voicing their concerns over the potential abuse of the powerful accessibility feature by bad actors and “pranksters”. Social media users are already thinking of the various ways Personal Voice can be turned into something other than a helpful feature:

- Petty scams

- Virtual kidnapping

- Deceptive voice messages/recordings

- Pranks that step over the limits

One that stands out in particular (thanks to being discussed by big news outlets) is Virtual Kidnapping, which is a telephone scam that takes on many forms. This is essentially an extortion scheme that tricks victims into paying a ransom to free a loved one they believe is being threatened with violence or death. The twist? “Unlike traditional abductions, virtual kidnappers have not actually kidnapped anyone. Instead, through deceptions and threats, they coerce victims to pay a quick ransom before the scheme falls apart”, says the FBI.

I recommend a fascinating (but still fun) episode of the Armchair Expert podcast, where people call Dax Shepard to tell him about the time when they were scammed. The virtual kidnapping story was intense but very insightful.

So, what I say is… Perhaps we should try and focus on the positive side of Personal Voice and all other AI and ML-powered accessibility features, which can help those in need? I’d leave the suspicion for later. Meanwhile, you can learn everything about Apple’s new features for cognitive accessibility, along with Live Speech, Personal Voice, and Point and Speak in Magnifier via the company’s blog post.
