Google used machine learning to improve the portrait mode on the Pixel 3 and Pixel 3 XL

The Pixel 3 and Pixel 3 XL both have one of the best camera systems found on a smartphone today. Yet Google achieves this with just one camera on the back of each phone. Even without a second rear camera, the phones still produce the bokeh effect in portrait mode thanks to software and other processing tricks. In a blog post published today, Google explains how it predicts depth on the Pixel 3 without the use of a second camera.

Last year's Pixel 2 and Pixel 2 XL used Phase Detection Autofocus (PDAF), also known as dual-pixel autofocus, along with a "traditional non-learned stereo algorithm" to take portraits. PDAF captures two slightly different views of the same scene, and the small shift between those views (parallax) is used to build the depth map required to achieve the bokeh effect. And while the 2017 models take great portraits with a background blur that can be made weaker or stronger, Google wanted to improve portrait mode for the Pixel 3 and Pixel 3 XL.
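To make the parallax idea concrete, here is a minimal sketch of the classical stereo relation between pixel shift (disparity) and depth. This is the textbook pinhole-camera formula, not Google's actual algorithm, and the function name, focal length, and baseline values are hypothetical numbers chosen only for illustration.

```python
def depth_from_disparity(disparity_px, focal_length_px, baseline_m):
    """Classical stereo relation: depth = focal_length * baseline / disparity.

    disparity_px:    shift of a point between the two views, in pixels
    focal_length_px: lens focal length expressed in pixels (assumed value)
    baseline_m:      distance between the two viewpoints, in meters (assumed value)
    """
    if disparity_px <= 0:
        # No measurable shift means the point is effectively infinitely far away.
        return float("inf")
    return focal_length_px * baseline_m / disparity_px


# Illustrative numbers only: a tiny baseline, as with two views through one lens.
near = depth_from_disparity(disparity_px=2.0, focal_length_px=3000.0, baseline_m=0.001)
far = depth_from_disparity(disparity_px=0.5, focal_length_px=3000.0, baseline_m=0.001)
print(near, far)
```

Because the two PDAF views come from the same lens, the baseline is very small, so distant objects produce tiny shifts that are hard to measure. That is one reason parallax alone can lead to depth errors.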

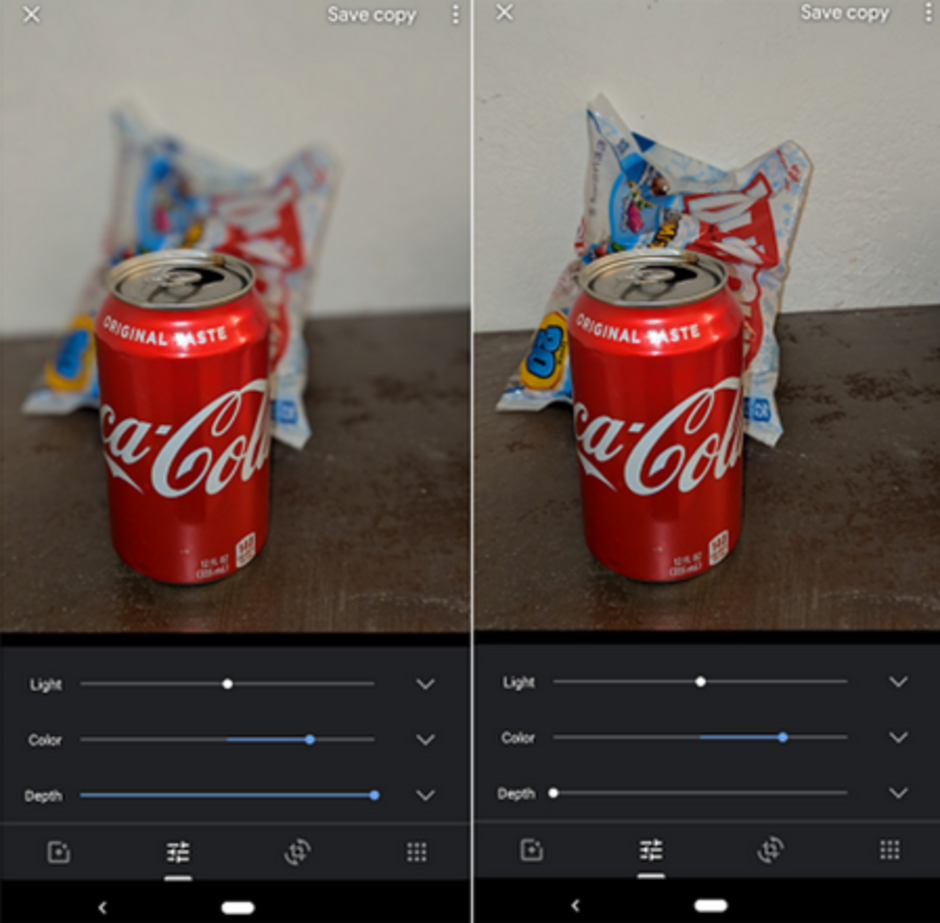

Google Photos app version 6.1 or later allows Pixel users to weaken or intensify the bokeh effect on a portrait

While PDAF works reasonably well, several factors can lead to errors when estimating depth. To improve depth estimation on the Pixel 3 models, Google added some new cues. One is the comparison of out-of-focus images in the background with sharply focused images that are closer, known as the defocus depth cue. Another is counting the number of pixels in an image of a person's face, which helps estimate how far that person is from the camera; this is known as a semantic cue. Google needed machine learning to create an algorithm that combines these cues into a more accurate depth estimate. To do this, the company needed to train a neural network.
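The semantic cue can be illustrated with a short sketch. Assuming faces are roughly the same physical size, the pixel area a face covers shrinks with the square of its distance, so a pixel count can be compared against a reference measurement. The function name and reference values below are hypothetical; Google's network learns this kind of cue from data rather than applying an explicit formula like this one.

```python
import math


def distance_from_face_pixels(face_pixel_count, ref_pixel_count, ref_distance_m):
    """Estimate subject distance from the number of pixels a face covers.

    Assumes a face of typical size covers ref_pixel_count pixels when it is
    ref_distance_m meters away (both are assumed calibration values).
    Area scales with 1 / distance^2, so distance scales with sqrt(ref / observed).
    """
    if face_pixel_count <= 0:
        raise ValueError("face_pixel_count must be positive")
    return ref_distance_m * math.sqrt(ref_pixel_count / face_pixel_count)


# A face covering a quarter of the reference area is about twice as far away.
estimate = distance_from_face_pixels(
    face_pixel_count=10_000, ref_pixel_count=40_000, ref_distance_m=1.0
)
print(estimate)
```

An estimate like this is coarse on its own, since face sizes vary, which is why it is useful as one cue among several rather than as a standalone depth measurement.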

Training the network required plenty of PDAF images and high-quality depth maps. So Google created a case that fit five Pixel 3 phones at once. Using Wi-Fi, the company captured the pictures from all five cameras at the same time (or within approximately 2 milliseconds of each other). The five different viewpoints allowed Google to create parallax in five different directions, helping to create more accurate depth information.

"This (machine learning)-based depth estimation needs to run fast on the Pixel 3, so that users don’t have to wait too long for their Portrait Mode shots. However, to get good depth estimates that makes use of subtle defocus and parallax cues, we have to feed full resolution, multi-megapixel PDAF images into the network. To ensure fast results, we use TensorFlow Lite, a cross-platform solution for running machine learning models on mobile and embedded devices and the Pixel 3’s powerful GPU to compute depth quickly despite our abnormally large inputs. We then combine the resulting depth estimates with masks from our person segmentation neural network to produce beautiful Portrait Mode results."-Google

This case, which holds five Pixel 3 phones, allows Google to collect data to train the neural network required for more accurate depth estimates

Google continues to use the Pixel cameras to market the phones. A series of videos called the 'Unswitchables' shows various phone owners testing out the Pixel 3 to see if they will eventually switch from their current handsets. At first, most of these people say that they would never switch, but are won over by the end of each episode by the camera and some of Google's features.
