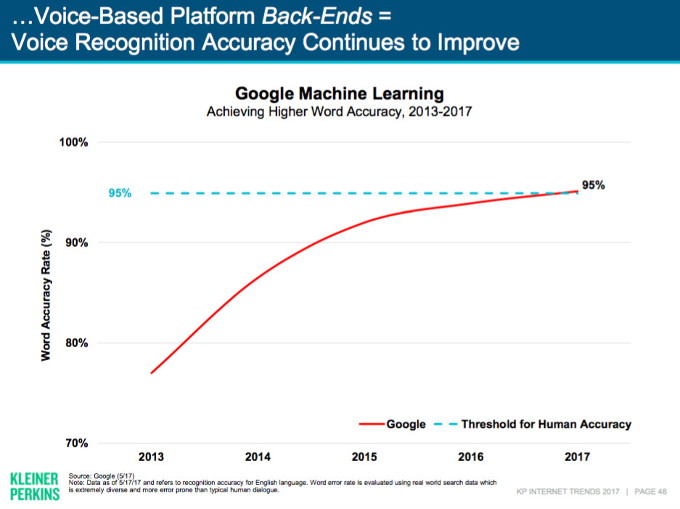

Google now understands language at 95% accuracy, has improved by 20% since 2013

Google now understands human language with 95% accuracy, thanks to improvements in machine learning. This allows the company to detect speech and act upon it in a meaningful way, and it will clearly be a huge benefit to the Google Assistant, a core Google product that is available on many Android phones.

What's notable, though, is not only the figure, but how Google arrived at it: the company improved its understanding of human language by a whopping 20% in just the last four years or so, since 2013, according to a report by Kleiner Perkins Caufield & Byers partner Mary Meeker.

These improvements bring us closer to a day when talking to a phone will be as quick and smooth as talking to a real person. We're not there yet, of course, but we're getting close very quickly.

Another interesting figure: the number of voice queries made on mobile phones has also grown rapidly, and in 2016, 20% of mobile queries were made via voice.

Do you use voice input on your phone, and if so, how? Speak up in the comments section below.

source: Recode
